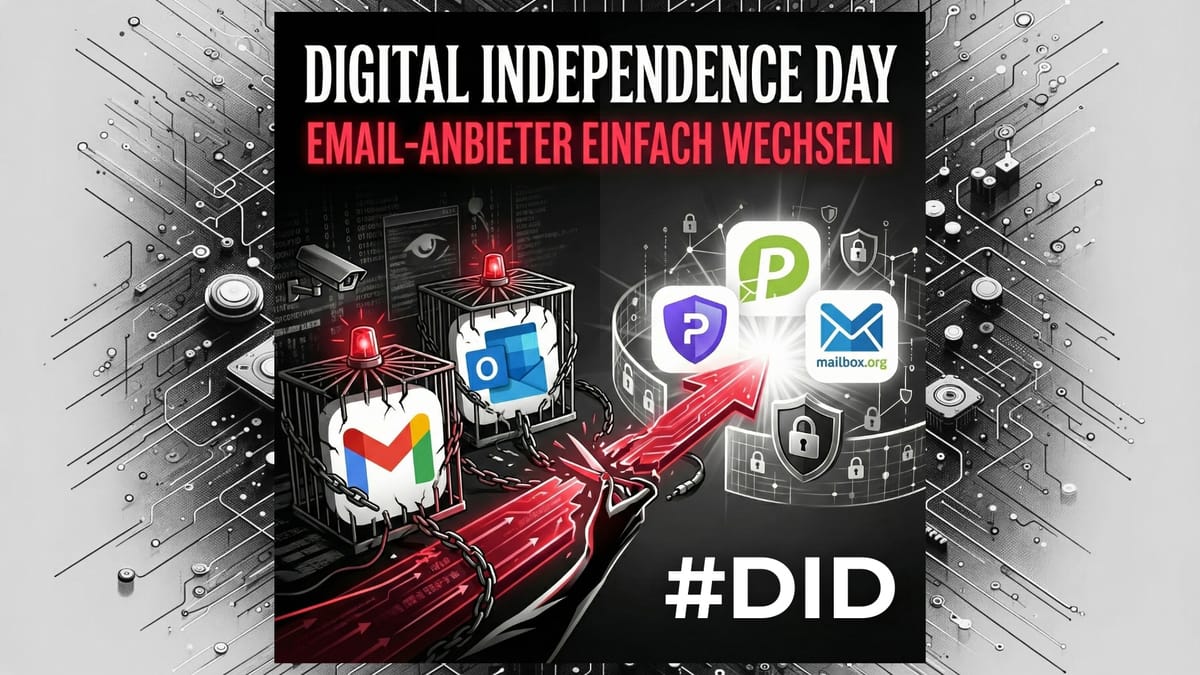

I emailed my colleagues from my volunteer work to tell them that my GMail account would self-destruct next week. Unfortunately, that job was the only reason I had been forced to use GMail.

I defiantly included this link:

https://tuta.com/fr/blog/degoogle-list

Thank you, @Tutanota, for the best text on the subject I have been able to find in French! And for the email account.

#Google #GMail #EuropeanAlternatives #activism #boycottUSA #dataSecurity #degoogle