AI "artists". Nothing like a hard day's work for those guys. 🧑‍🎨

#fuckai #noai #fuckaimusic #fuckaiart #aimusic #music #humanart #ai #aiart #humans #artist #musicians #art #creativity #creative #original #innovation #hardwork

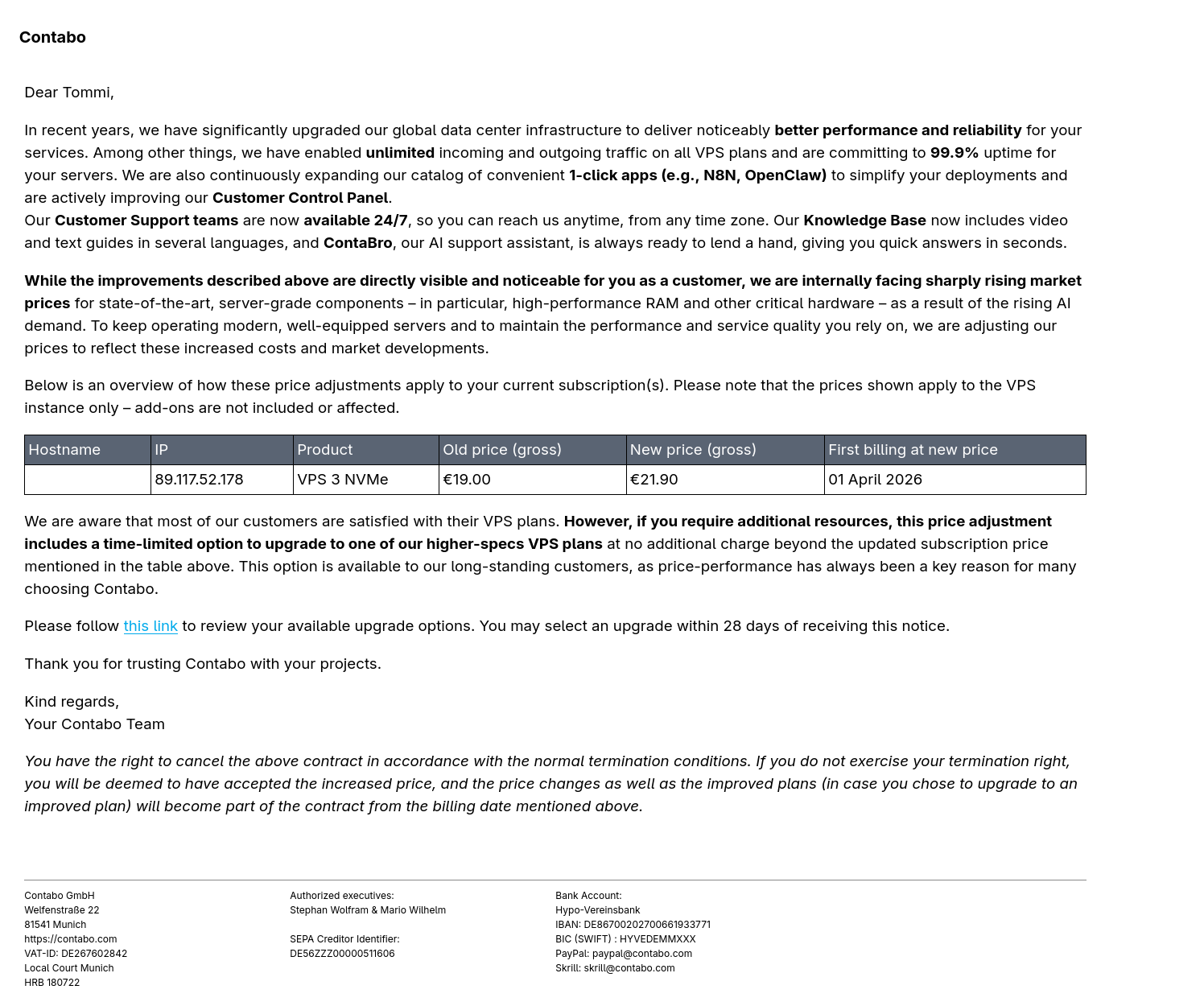

No AI in email! ✅

That's what we at Tuta stand for, and #Microsoft has just proven why it was a good decision.

😡 See how Copilot accessed even confidential emails: ➡️ https://tuta.com/blog/microsoft-copilot-reads-confidential-business-mail

🔗 https://stephvee.ca/blog/updates/my-rss-feed-is-now-excerpt-only/

After thinking more about the worsening data theft/scraping problem, I've decided to strip my RSS feed of full-text content. I did not make this decision lightly, and I am frankly incredibly annoyed about it. We shouldn't have to worry about this sort of stuff...

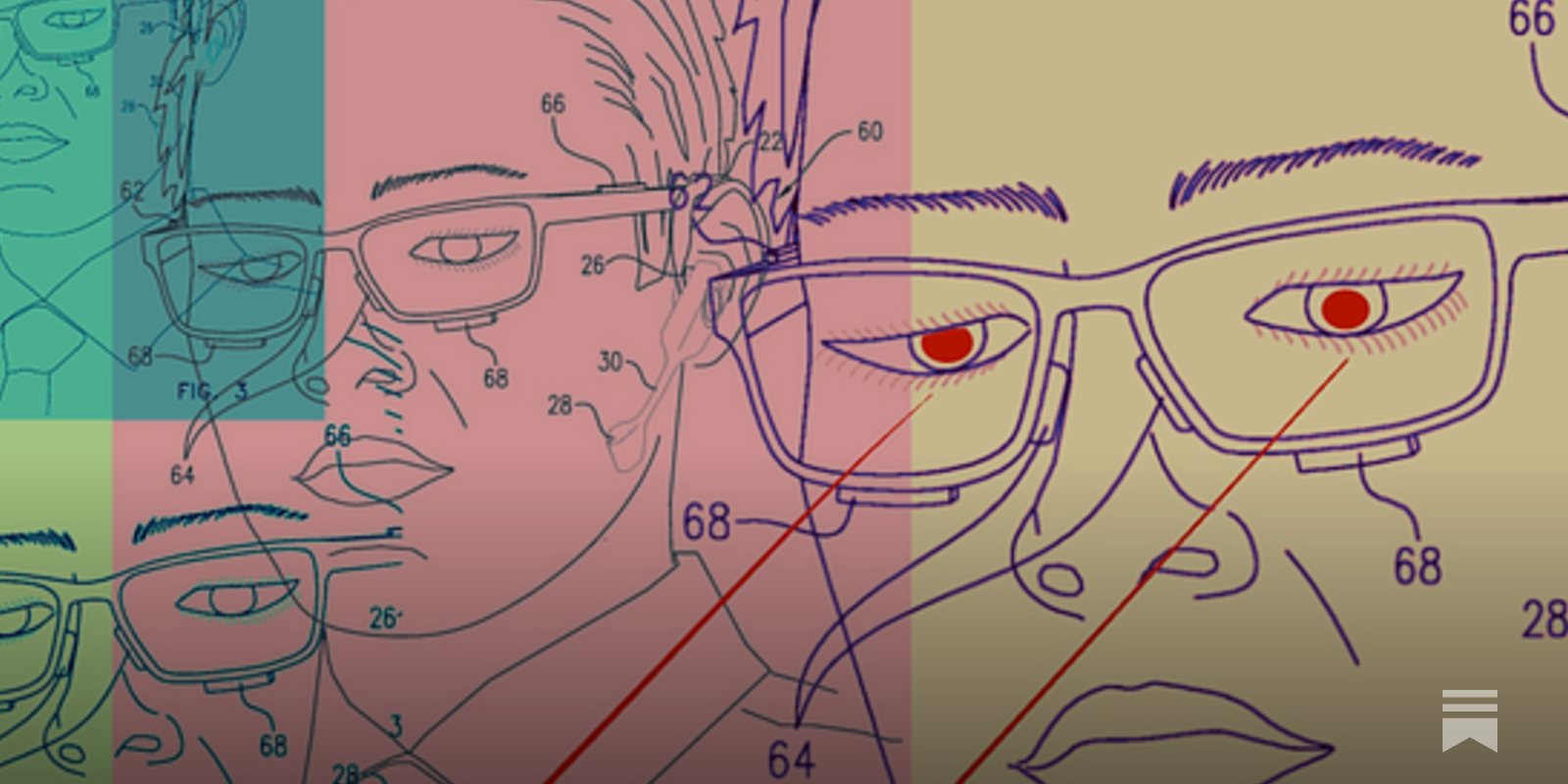

Christ on wheels, every time I think I've got a handle on the darkness of the world 😧

Apple just paid $2bn for an Israeli tech company with no revenues. The company builds infrared sensors to map imperceptible facial movements that determine the words you're thinking. 30% of its staff were called up to do genocide.

https://www.donotpanic.news/p/apple-just-bought-a-sinister-pre

regarding the "AI hit piece" post going around: it's put me past a tipping point. I don't think the tech is inherently evil and I don't buy half of what anti-genAI zealots say, but I've now turned the corner to thinking it's time to fight big-tech "AI" by any means necessary to drag out their schemes until the torrent of venture capital implodes. the current trajectory needs to be arrested, and megacorps pushing this shit need to be put back on their asses. #genAI #generativeAI #AIslop #fuckAI

FINISHED IT. #AI #Tech #technology #embroidery #Handmade #FuckAI

Art by Bread Nugent. This one's for sale here: https://breadnugent.bigcartel.com/product/oh-deer