"The old way of thinking about how to make #socialplatforms safer was that you had to make them do more #contentmoderation.

But by the mid-2020s, almost everyone knew both adults & children who struggled to regulate their usage of apps and suffered as a result.

Regulators & plaintiffs’ attorneys began new investigations into whether a #socialapp might be held liable not for what people said on it, but rather how it worked.

Increasingly, it appears they will."

#Section230

https://www.platformer.news/social-media-addiction-trial-eu-tiktok-investigation/

We're now seeing an outpouring of TikTok "refugees" flee to UpScrolled after new U.S. owners employ more censorship.

I hope that folks now understand how incredibly short-sighted it was for the Biden administration to give the executive branch new authority to buy anything foreign so long as it is deemed a threat under the magic words "national security". It doesn't matter who is in power; a law that vague should never have passed.

Mods wanted!!

Kolektiva.social has now been around for nearly five years. During that time, we have received lots of valuable feedback. It has helped, and continues to help, us better understand problems with our moderation and what needs to change. A clear takeaway from the issues we've come up against is that we need more help with content moderation.

Over the past several months, it's become more evident than ever that our movements require autonomous social media networks. To be blunt, if we want Kolektiva (and the Fediverse more broadly) to continue to grow in the face of cyberlibertarian co-optation, we need more people to help out. Developing the Fediverse as an alternative, autonomous social network involves more than just using its free, open-source, decentralized infrastructure as a simple substitute for surveillance capitalist platforms. It also takes shared responsibility and thoughtful, human-centered moderation. As anarchists, we view content moderation through the lens of mutual aid: it is a form of collective care that enhances the quality of collective information flows and means of communication. Mutual aid is premised on working together to expand solidarity and build movements. It is about sharing time, attention, and responsibility. Stepping up to support moderation means helping to maintain community standards and to keep our space grounded in the values we share.

Corporate social media platforms do not operate on the principle of mutual aid. They operate on the basis of profit, mining their users for data that they can process and sell to advertisers. Neither do the moderators of these social media platforms operate on the principle of mutual aid. They do these difficult and often brutal jobs because they are paid a wage out of the revenue brought in from advertisers. Kolektiva's moderation team consists of volunteers. Doing social media differently requires a shift in the service user/service provider mentality. It requires more people to step up, so that the burden of moderation is shared more equitably, and so that the moderation team is enriched by more perspectives.

If you join the Kolektiva moderation team, you’ll be part of a collective that spans several continents and brings different experiences and politics into conversation. Additionally, you'll build skills in navigating conflict and disagreement — skills that are valuable to our movements outside the Fediverse.

Of course, we know that not everyone can volunteer their time. We want to mention that there are plenty of ways to contribute: flag posts, report bugs and share direct feedback. We are grateful for everyone who has taken the time to do this and has discussed and engaged with us directly.

Since launching in 2020, Kolektiva has grown beyond what we ever expected. While our goal has never been to become massive, we value our place as a landing spot into the Fediverse for many — and a home base for some.

In addition to expanding our content moderation team, we have other plans in the works. These include starting a blog and developing educational materials to support people who want to create their own instances.

If you value Kolektiva, please consider joining the Kolektiva content moderation team!

Contact us at if you’re interested or have questions.

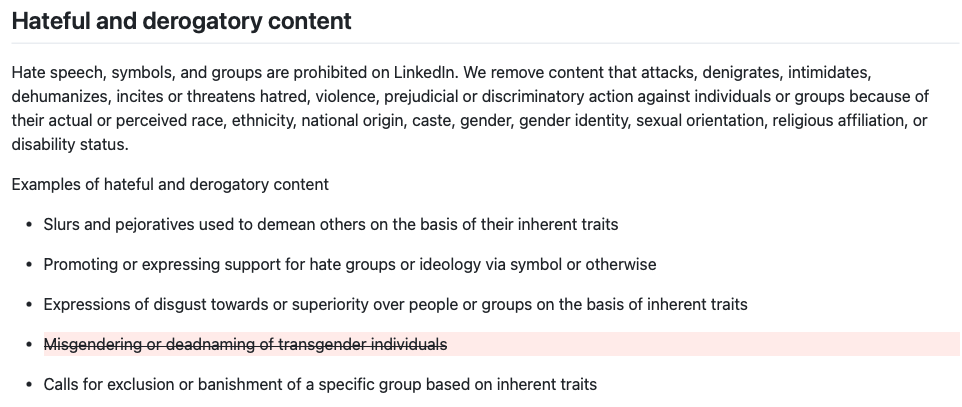

🏳️⚧️ LinkedIn removes #HateSpeech protections for #transgender individuals

@opentermsarchive detected on July 29th that LinkedIn removed transgender-related protections from its policy on hateful and derogatory content. The platform no longer lists “misgendering or deadnaming of transgender individuals” as examples of prohibited conduct.

👉 Full memo and sources: https://opentermsarchive.org/en/memos/linkedin-removes-transgender-hate-speech-protections/

🇫🇷 Memo in French: https://opentermsarchive.org/fr/memos/linkedin-retire-des-protections-contre-les-discours-de-haine-envers-les-personnes-transgenres/

#TermsSpotting #TrustAndSafety #LGBTQ #ContentModeration #transrights

"Wikipedia editors just adopted a new policy to help them deal with the slew of AI-generated articles flooding the online encyclopedia. The new policy, which gives an administrator the authority to quickly delete an AI-generated article that meets a certain criteria, isn’t only important to Wikipedia, but also an important example for how to deal with the growing AI slop problem from a platform that has so far managed to withstand various forms of enshittification that have plagued the rest of the internet."

https://www.404media.co/wikipedia-editors-adopt-speedy-deletion-policy-for-ai-slop-articles/

Substack Sends Push Alert for Nazi Newsletter

#NaziNewsletter #PushAlert #AccidentalDistribution #ContentModeration #OnlineCommunity

https://gizmodo.com/substack-sends-push-alert-for-nazi-newsletter-2000636663

