Gary Marcus is onto something here. Maybe true AGI is not so impossible to reach after all: probably not in the near future, but likely within 20 years.

"For all the efforts that OpenAI and other leaders of deep learning, such as Geoffrey Hinton and Yann LeCun, have put into running neurosymbolic AI, and me personally, down over the last decade, the cutting edge is finally, if quietly and without public acknowledgement, tilting towards neurosymbolic AI.

This essay explains what neurosymbolic AI is, why you should believe it, how deep learning advocates long fought against it, and how in 2025, OpenAI and xAI have accidentally vindicated it.

And it is about why, in 2025, neurosymbolic AI has emerged as the team to beat.

It is also an essay about sociology.

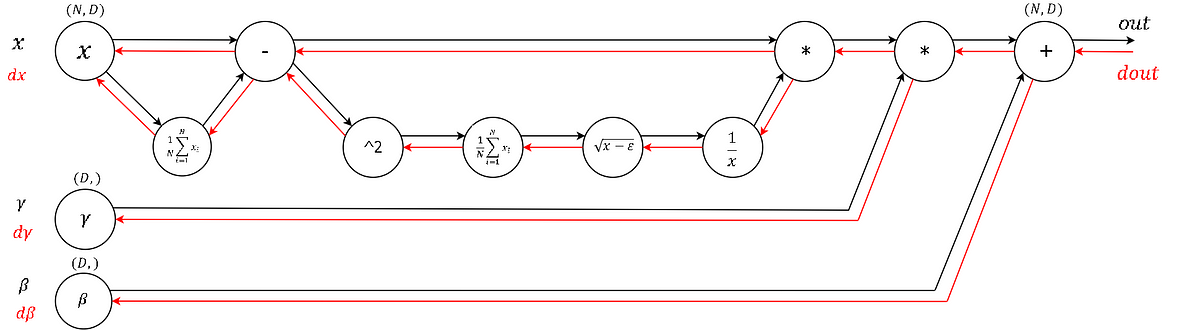

The essential premise of neurosymbolic AI is this: the two most common approaches to AI, neural networks and classical symbolic AI, have complementary strengths and weaknesses. Neural networks are good at learning but weak at generalization; symbolic systems are good at generalization, but not at learning."

https://garymarcus.substack.com/p/how-o3-and-grok-4-accidentally-vindicated

#AI #NeuralNetworks #DeepLearning #SymbolicAI #NeuroSymbolicAI #AGI