Join the workshop at #39C3!

NEW DATE: day 4, TODAY at 13:40 @ Free Knowledge Habitat Workshop Area.

Most people and organisations have their very own way of acquiring, organising, archiving, and sharing knowledge, and of collaborating on knowledge repositories. This broad spectrum of opinions and approaches has resulted in a diverse and rich ecosystem of knowledge management solutions. However, it also means that knowledge sources are scattered and disconnected. What would it mean to build bridges among wikis and federate knowledge?

This workshop centres on a discussion exploring the challenge of federated knowledge, starting from two questions:

- What does it mean to federate knowledge repositories?

- Instead of pursuing a silver-bullet solution that covers all use cases, what would it mean to foster and enable interoperability between different software?

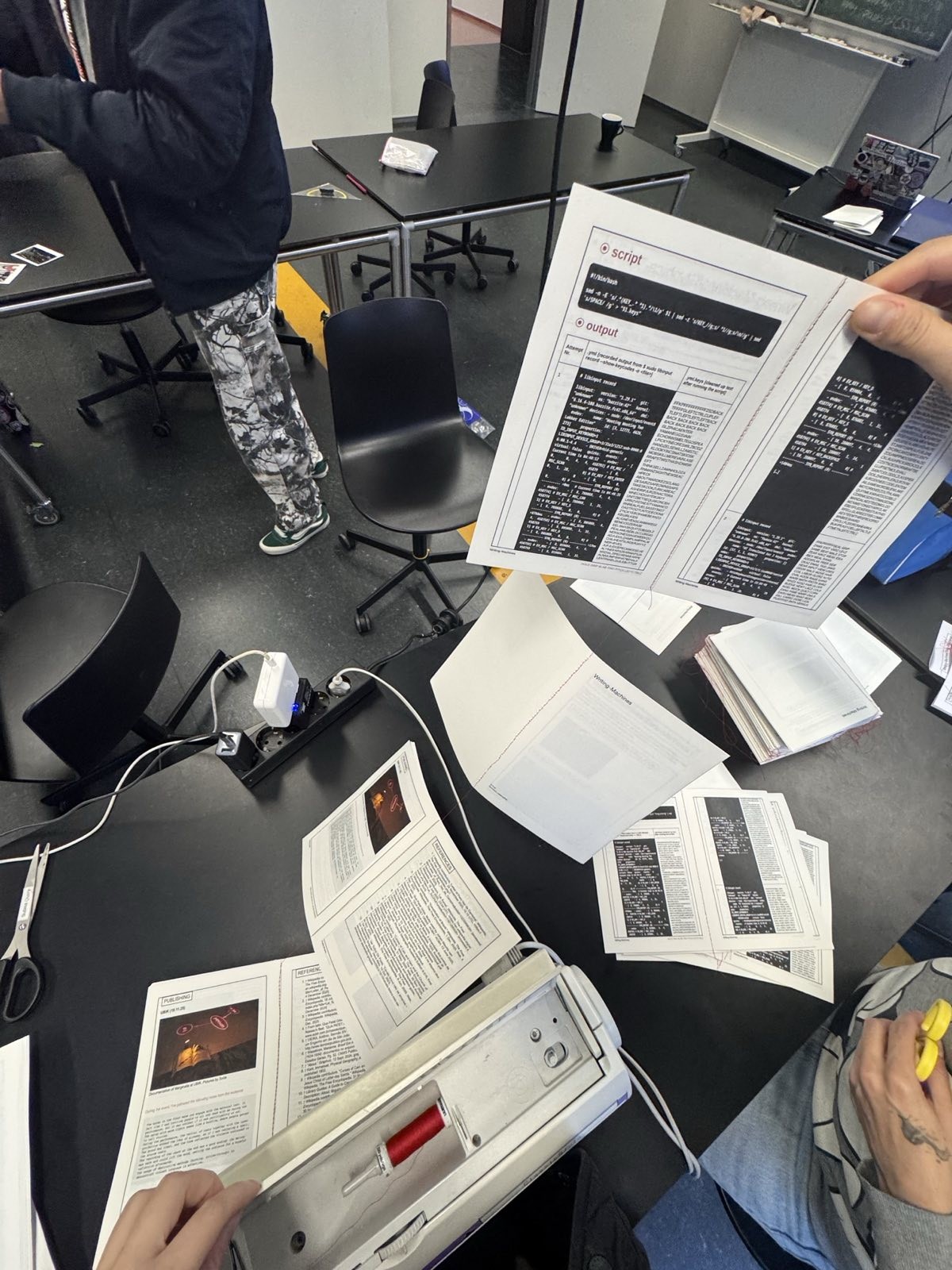

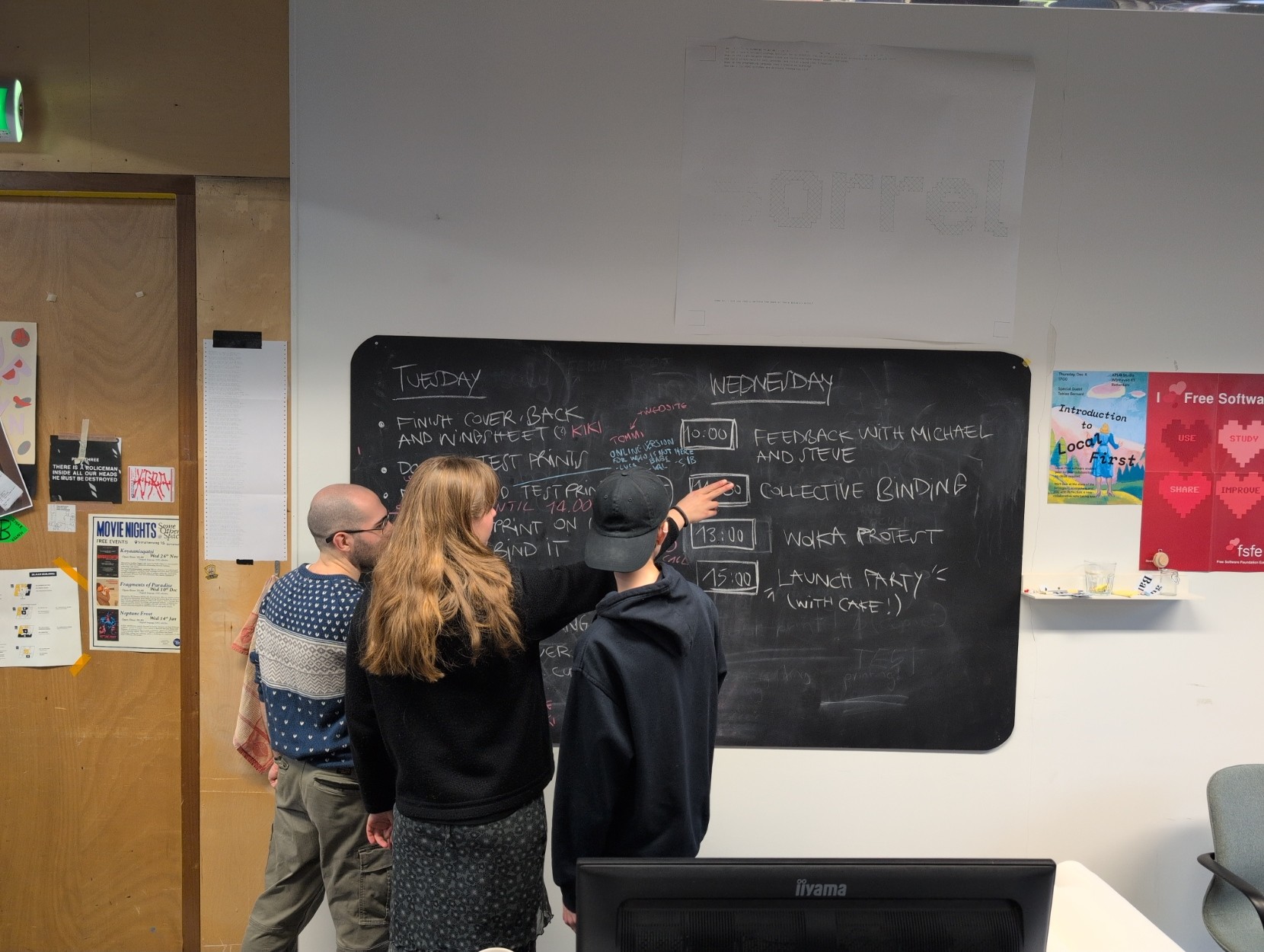

These questions stem from years of wondering how to integrate my personal note-taking with collective, participatory knowledge management at work, in organisations, institutions, and informal collectives. Recently, I began actively researching this topic as I started playing with the MediaWiki API to cross-synchronise my local Markdown notes and the XPUB wiki, the public learning wiki of the Experimental Publishing master. I am puzzling over how to take advantage of the potential of a specific piece of software (in this case, MediaWiki) while fearing being locked in.

Some further, more specific, insights and questions:

- Local-first approaches and software (e.g. Reflection)

- Interesting experiments based on existing protocols, such as Ibis

- What do we make of semi-open and obscure yet very cool initiatives like Anytype?

- The power and the limits of plain text: how to enable collaboration on simple Markdown files and build on top of them, as Obsidian does

Cc: @modal @p2panda @obsidian @wikimediaDE @dweb

#knowledge #FreeKnowledge #wiki #MediaWiki #API #Obsidian #Anytype #Ibis #IbisWiki #Reflection #CCC #Federation #federatedKnowledge #docs #PKM #knowledgeManagement #personalKnowledgeManagement #collectiveKnowledgeManagement #DWeb #decentralization #ActivityPub