Repli-scooping some of what I find in a soon-to-be-finished paper about correlations and effects between reflective reasoning and philosophical thought experiments across multiple participant samples, the Brauer lab finds:

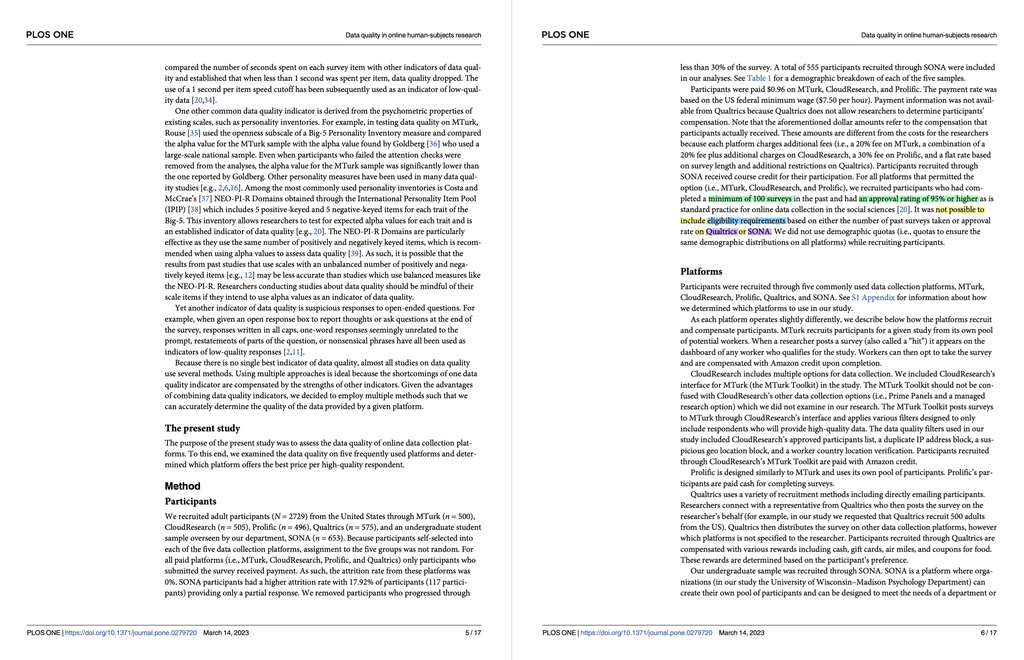

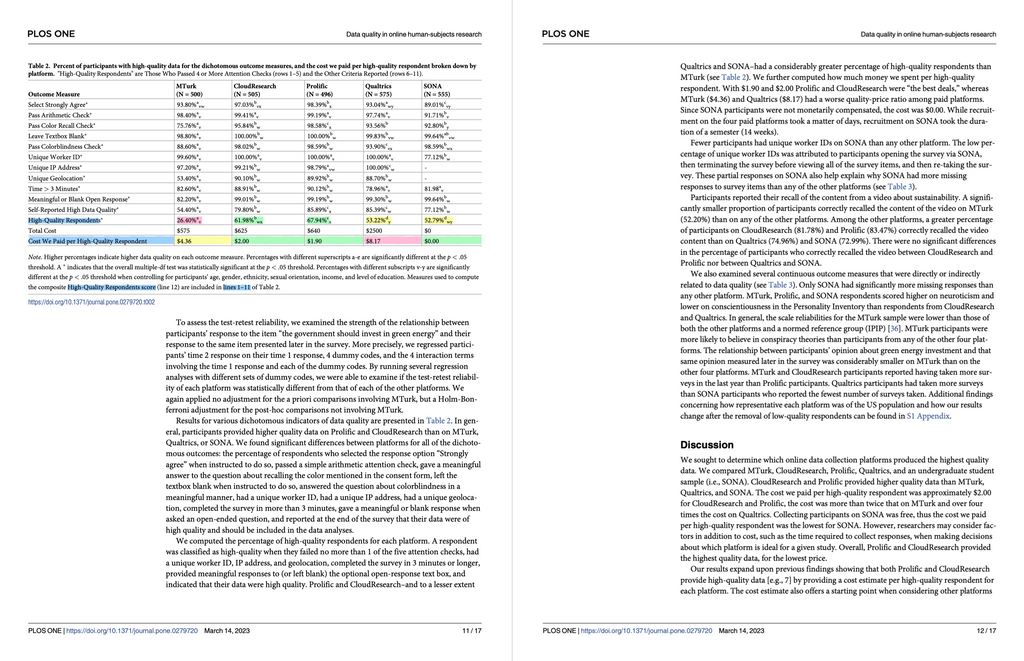

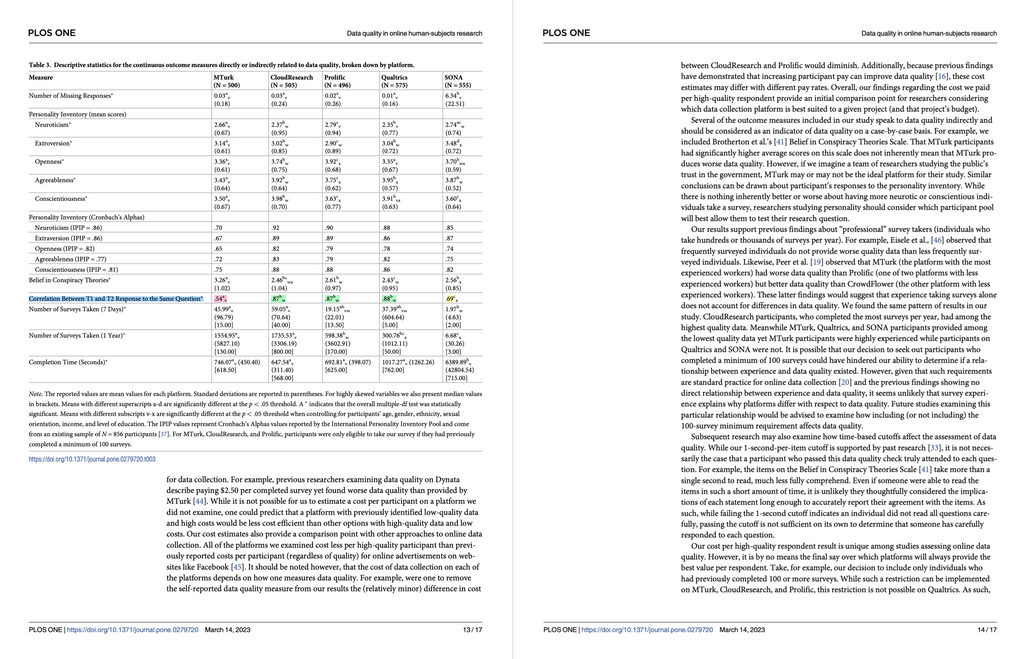

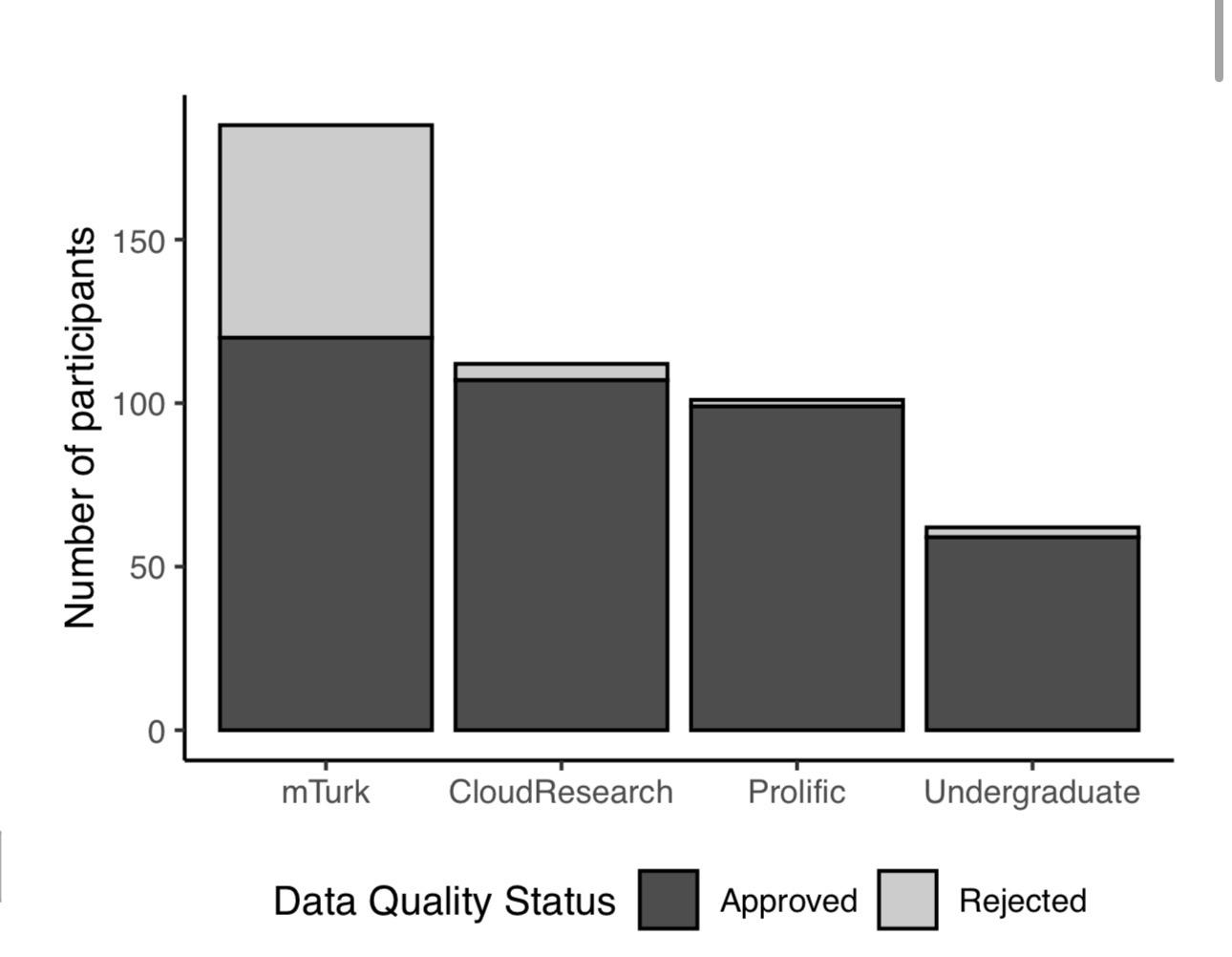

- mTurk workers offered lower-quality and lower-value data than Prolific workers, students, and even CloudResearch's approved mTurk workers

- Qualtrics panels had the least value but moderate quality

- Students seemed to offer the highest value


I forgot to share the #mTurk data quality result that got scooped:

“In late 2020…. Participants from the United States were recruited from Amazon Mechanical Turk, #CloudResearch, #Prolific, and a #university. One participant source yielded up to 18 times as many low-quality respondents as the other three.”

https://doi.org/10.1093/analys/anaf015

#psychology #philosophy #surveyMethods #quantMethods #dataScience #qualityControl

Reflection-philosophy order effects and correlations across samples

We've found recruiting people for online #research via #onlineAdvertising yielded good results on overt and covert #dataQuality measures (perhaps because participation incentives aren't financial):

Attention checks passed ≅ 2.6 out of 3

ReCAPTCHA (v3) ≅ 0.94 out of 1.0

Sample size > 5000 (from six continents)

https://doi.org/10.1017/S0034412525000198

#surveyMethods #cogSci #psychology #xPhi #QualityControl #econ #marketing

@ByrdNick Interesting. I love the idea of giving participants non-financial incentives in return for their participation/data. Much more of a partnership model. Harder to do, requires more thought, but great to see it yields data quality (which is sure only to drop further https://tomstafford.substack.com/p/faking-survey-responses-with-llms )