Some technical updates regarding #MakerTube. I have started building a storage cluster to gain operational experience with it. We will soon hit the plan limit on Hetzner for backups (20TB), and the choice was between the managed object store (expensive) and running it myself (more work). For now I will only use it for backups and block storage and see how it turns out. In the long run, though, I guess there is no way around it if we want to keep costs in check. Let's see! #peertube #ceph

If you're still relying on #MinIO, you're certainly aware that after their #OpenSource bait-and-switch, it is now a growing liability and technical debt in your infra.

#Ceph (a rock solid choice!) seems daunting & complex for your use case?

Check out #garage! #S3 storage written in #Rust, all relevant features & sponsored by several funds.

It's what I run in my own labs.

Their commitment to OSS:

https://garagehq.deuxfleurs.fr/blog/2025-commoning-opensource/

How Ceph works: every file handed to Ceph is split into objects, and each object is stored on several disks across several servers.

Ceph's interface can be S3, a file system accessible like a network share, or even a block device.
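The placement principle described above (files split into objects, each object stored on several disks across several servers) can be illustrated with a toy sketch. This is not how Ceph is implemented: Ceph uses placement groups and the CRUSH algorithm, while the sketch below swaps in simple rendezvous hashing as a stand-in. The 4 MiB object size, the replica count of 3, and all server and disk names are assumptions made up for the example.

```python
import hashlib

OBJECT_SIZE = 4 * 1024 * 1024  # assumed chunk size for this sketch (4 MiB)


def split_into_objects(data: bytes, object_size: int = OBJECT_SIZE) -> list[bytes]:
    """Cut a file's bytes into fixed-size objects (the last one may be shorter)."""
    return [data[i:i + object_size] for i in range(0, len(data), object_size)]


def score(object_name: str, target: str) -> int:
    """Deterministic pseudo-random score for an (object, target) pair."""
    digest = hashlib.sha256(f"{object_name}/{target}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, cluster: dict[str, list[str]], replicas: int = 3) -> list[tuple[str, str]]:
    """Pick `replicas` disks for an object, never two on the same server.

    Toy rendezvous hashing, NOT Ceph's CRUSH: choose the best-scoring disk on
    each server, then keep the best-scoring servers.
    """
    if replicas > len(cluster):
        raise ValueError("not enough servers for the requested replica count")
    candidates = []
    for server, disks in cluster.items():
        best_disk = max(disks, key=lambda d: score(object_name, f"{server}:{d}"))
        candidates.append((score(object_name, f"{server}:{best_disk}"), server, best_disk))
    candidates.sort(reverse=True)
    return [(server, disk) for _, server, disk in candidates[:replicas]]


# Hypothetical cluster: four servers with two disks each.
CLUSTER = {
    "server-a": ["disk0", "disk1"],
    "server-b": ["disk0", "disk1"],
    "server-c": ["disk0", "disk1"],
    "server-d": ["disk0", "disk1"],
}

if __name__ == "__main__":
    payload = bytes(10 * 1024 * 1024)  # a 10 MiB "file" of zero bytes
    for index, chunk in enumerate(split_into_objects(payload)):
        name = f"video.mp4.{index:08d}"  # hypothetical object naming scheme
        print(name, len(chunk), place(name, CLUSTER))
```

Because placement is computed from a hash rather than looked up in a central table, any client that knows the cluster layout can work out where an object lives. That general idea carries over to Ceph, even though the real algorithm is considerably more involved.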

Back to technical matters with #CEPH, "a distributed storage solution"

I'm learning about syllogomania (compulsive hoarding). https://fr.wikipedia.org/wiki/Syllogomanie

Among the tips for free software projects: have someone in charge of welcoming newcomers. Create a "UX champion" role.

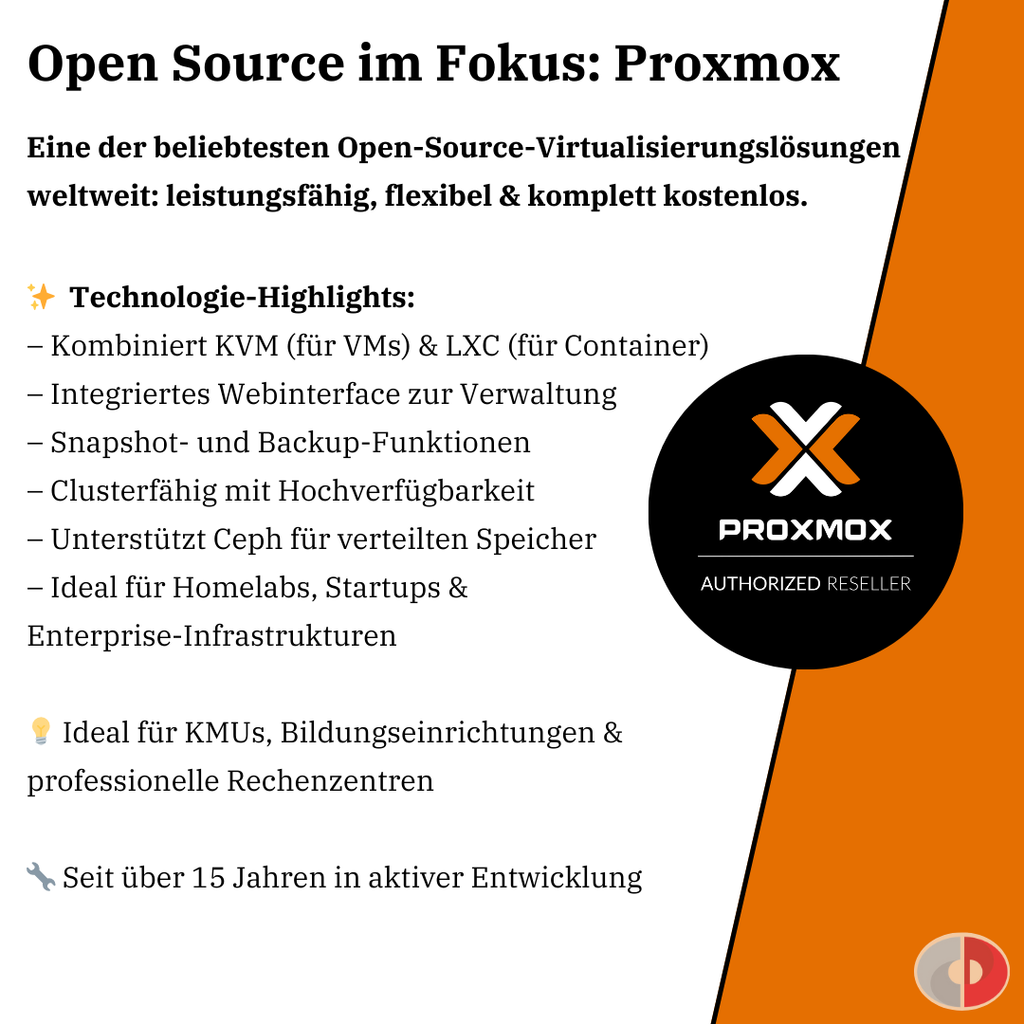

🤜🤛 Secure your IT with Proxmox & credativ®: licensing, support & expertise from a single source, tailored for businesses:

https://www.credativ.de/portfolio/support/proxmox-virtualisierung/

I'm a bit undecided right now when it comes to #Ceph...

Currently on the shortlist:

- 6x 4U with 36 LFF bays: SSDs for WAL/DB have to go into LFF bays and so cost valuable data storage

- 4 or 6x 4U with 36 LFF + 24 SFF bays: appealing because there is room for data as well as WAL/DB and flash data

- 6x 1U with 12x LFF + 4x SFF: scaled up to 4U that is 48x LFF and 16x SFF, but also more CPU/RAM/NICs

Opinions from the Ceph experts here?
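To make the bay arithmetic in the three options easier to compare, here is a small sketch that totals the bays per option and normalises them to 4U. The chassis figures come from the post; the class and function names are made up for illustration, and the sketch only counts bays, it does not model CPU, RAM, NICs or cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chassis:
    name: str
    rack_units: int
    lff: int  # 3.5" bays per chassis
    sff: int  # 2.5" bays per chassis


def totals(chassis: Chassis, count: int) -> dict[str, int]:
    """Rack space and bays for `count` chassis of one type."""
    return {
        "rack_units": chassis.rack_units * count,
        "lff": chassis.lff * count,
        "sff": chassis.sff * count,
    }


def per_4u(chassis: Chassis) -> tuple[int, int]:
    """LFF and SFF bays that fit into 4U when stacking this chassis type."""
    return (chassis.lff * 4 // chassis.rack_units,
            chassis.sff * 4 // chassis.rack_units)


# Figures taken from the three options in the post.
LFF_ONLY = Chassis("4U, 36 LFF", rack_units=4, lff=36, sff=0)
MIXED = Chassis("4U, 36 LFF + 24 SFF", rack_units=4, lff=36, sff=24)
ONE_U = Chassis("1U, 12 LFF + 4 SFF", rack_units=1, lff=12, sff=4)

if __name__ == "__main__":
    for chassis, count in [(LFF_ONLY, 6), (MIXED, 4), (MIXED, 6), (ONE_U, 6)]:
        print(f"{count}x {chassis.name}: {totals(chassis, count)}, per 4U: {per_4u(chassis)}")
```

For the first option the LFF total is an upper bound for data disks, since the post notes that the WAL/DB SSDs would have to occupy some of those LFF bays.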

Do I know someone interested in running a 10 PB object storage service (currently #NetApp)?

My employer is hiring for an #SRE position, preferably based in Finland, EU (hybrid work).

https://www.relexsolutions.com/careers/jobs/site-reliability-engineer/?gh_jid=6649500003
