"The effect of publishing expectations was statistically significant: scholars with higher publishing expectations (HPE) gave lower credibility ratings than scholars with LPE, suggesting a more critical evaluation. However, the effect size was small (ηₚ² = 0.014). No significant difference was found between [social science] and [humanities] scholars.

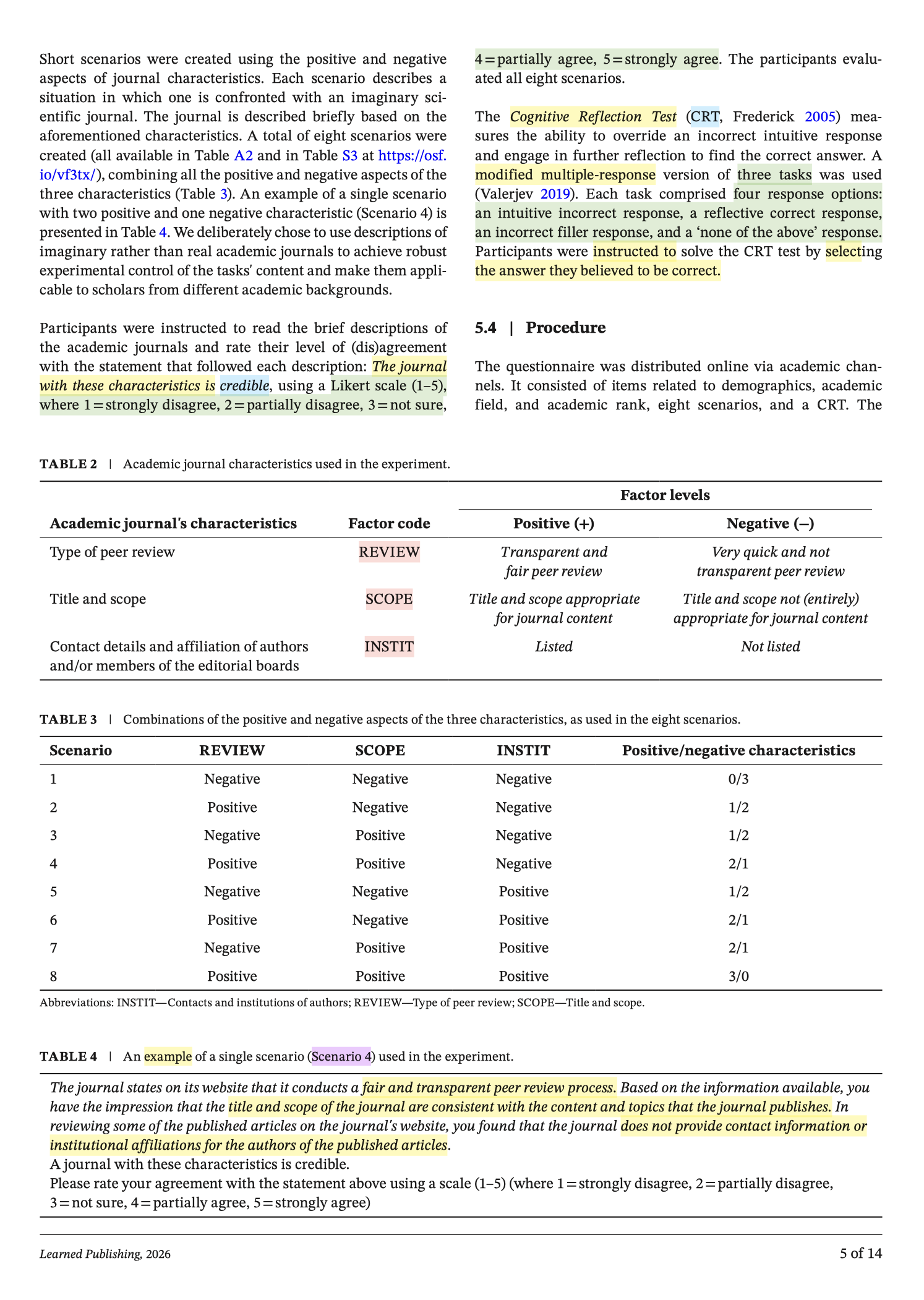

The number of positive characteristics had a large effect on credibility ratings, with a greater number of positive characteristics leading to notably higher credibility ratings. As illustrated in Figure 2, this increase follows an exponential trend, consistent with the interaction effects shown in Figure 1, where combinations of positive attributes enhance credibility beyond the sum of the individual attributes."


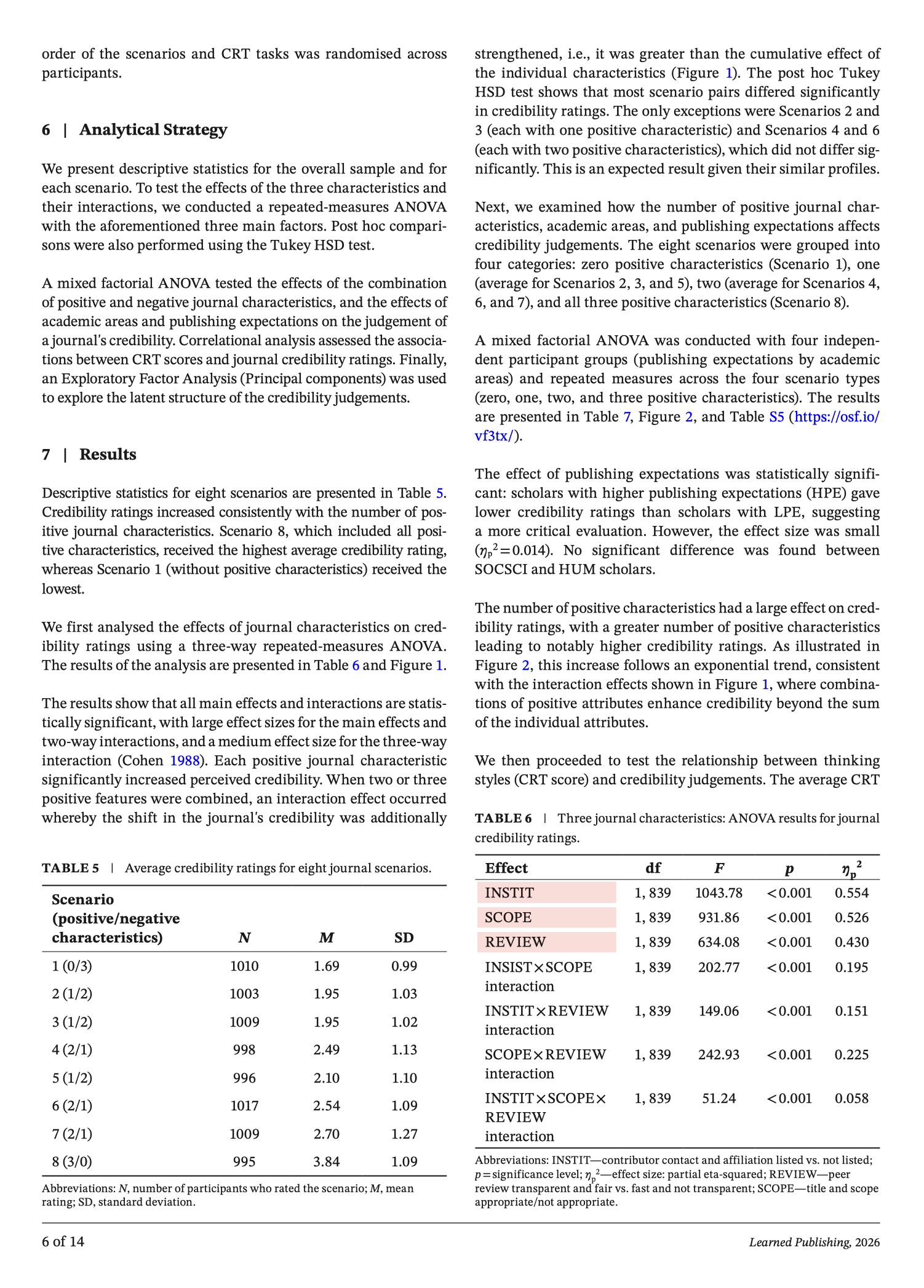

!["The correlation between [reflection test] scores and the average rating across all scenarios was negative and low, but statistically significant (Table 8), suggesting that more reflective participants tended to rate journals more critically. Specifically, a significant negative correlation was obtained for journals with zero or one positive journal characteristics and at the same time with three or two negative attributes. However, the ratings of journals with two and three positive attributes did not correlate with the [reflection test]."

"Exploratory Factor Analysis ...yielded a two-factor solution accounting for 59.39% of the total variance. Component 1 (eigenvalue of 3.593) explained 44.91% of the variance, whilst Component 2 (eigenvalue of 1.158) explained 14.48%. The structure matrix (see Table 9) shows that Component 1 included low-quality journals, and Component 2 included high-quality journals. Journals with mixed characteristics loaded on both components"](https://nerdculture.de/system/media_attachments/files/116/136/824/164/166/437/original/e906536c89d4a62d.png)
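
A quick sanity check on the factor-analysis numbers quoted above: when components are extracted from a correlation matrix, each component's share of the variance is its eigenvalue divided by the number of rated items. The sketch below assumes eight items. That count is not stated in the quoted passage; it is simply the value that appears consistent with the reported percentages, so treat `n_items` as a hypothetical parameter rather than a fact from the paper.

```python
# Eigenvalues as reported in the quoted passage.
eigenvalues = {"Component 1": 3.593, "Component 2": 1.158}

# ASSUMPTION: eight rated items. This number is not given in the quoted text.
n_items = 8


def variance_explained(eigenvalue, n_items):
    """Percentage of total variance for one component of a correlation matrix."""
    return 100.0 * eigenvalue / n_items


# Share of variance per component, and the combined share.
shares = {name: variance_explained(ev, n_items) for name, ev in eigenvalues.items()}
total = sum(shares.values())

for name, pct in shares.items():
    print(f"{name}: {pct:.3f}%")
print(f"Total: {total:.3f}%")
```

Under that assumption the computed shares land within rounding distance of the quoted 44.91%, 14.48%, and 59.39%.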