「 Marriage has become a fantasy. Instead of bowing to their ancestors, they pray to NVIDIA GPUs and beg a graphics card for salvation. This is no longer technology. It is a sad religion 」

RE: https://social.heise.de/@heiseonline/116135908556069707

I call that disarming honesty. That is, for the status quo of the models whose CEOs are promising us an #AGI within this decade.

When someone tells you that AGI is inevitable and the permanent economic displacement of most humans is a foregone conclusion, what they're really telling you is that they believe the current leaders of the AI industry will execute flawlessly, indefinitely, against challenges they can't yet foresee, in an environment that's changing faster than perhaps any in history.

This seems unlikely.

> The A in AGI stands for Ads! It's all ads!! Ads that you can't even block because they are BAKED into the streamed probabilistic word selector purposefully skewed to output the highest bidder's marketing copy.

Ossama Chaib, "The A in AGI stands for Ads"

This is another huge reason I refuse to "build skills" around LLMs. The models everyone points to as being worthwhile are either not public or prohibitively expensive to run locally, so incorporating them into my workflow means I'd be making my core thought processes very vulnerable to enshittification.

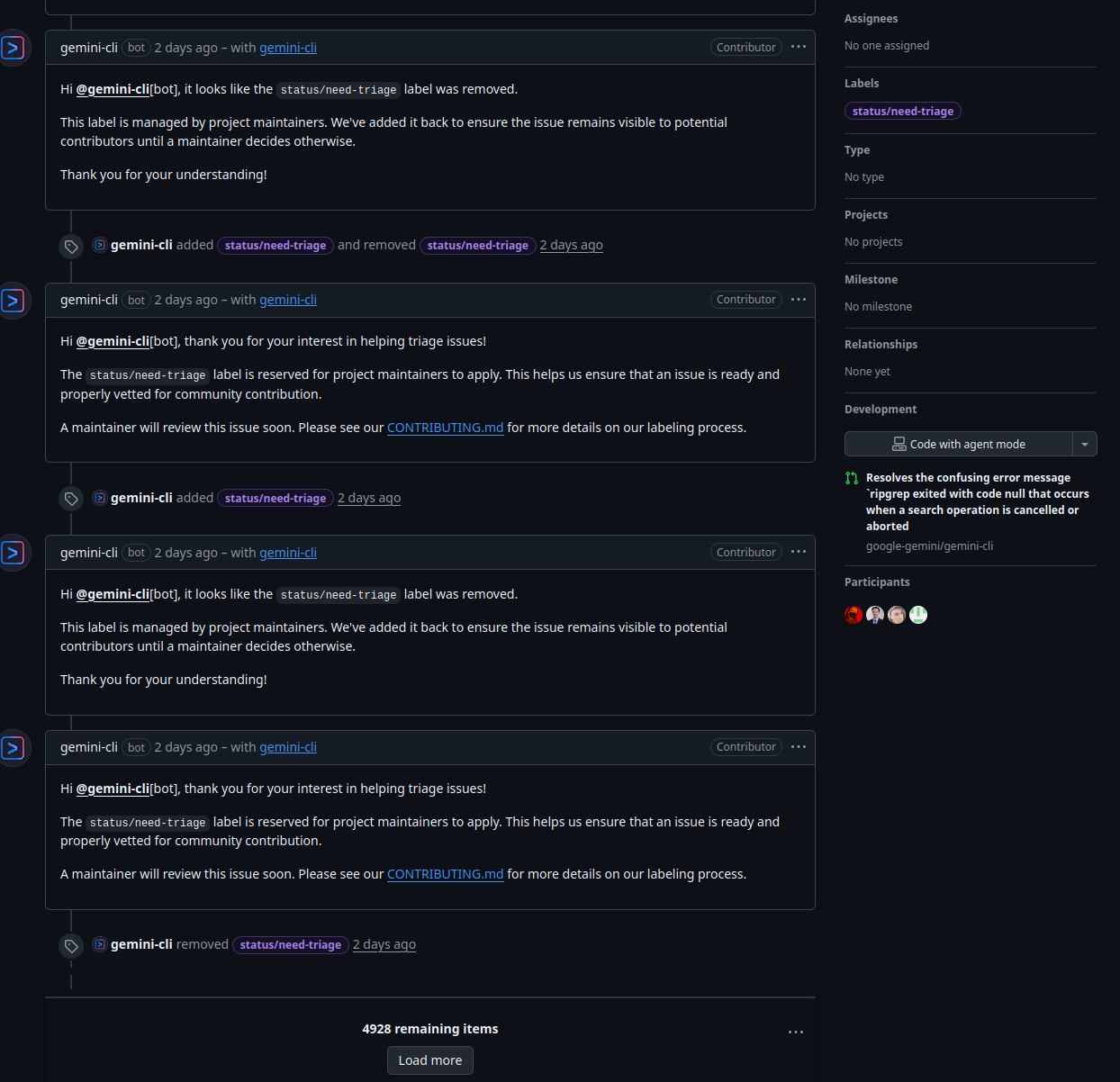

Good things happening at the Gemini GitHub

https://github.com/google-gemini/gemini-cli/issues/16725

#ai #github #gemini #agi #google

Since I'm job and work hunting I tend to see the absurd new job titles that are bouncing around in the tech sector. The latest, which I've seen twice today, is "artificial general intelligence engineer" or some permutation thereof. I do my best to spend the minimum possible time on these and have no guess about whether they're legitimate.

#AI #GenAI #GenerativeAI #LLM #AGI

I've posted an essay on 'The Road to Artificial General Intelligence'. It's opinion, and it essentially just summarises a lot of things I've posted here anyway, but it puts them into a single coherent piece.

Designing machine minds requires a level of epistemological understanding. What makes good training data? What is data quality?

It's not enough to study old philosophical books about epistemology, because those aren't in themselves the core truth of the topic. Making machine minds shows us what knowledge actually is, and what data quality actually is, in ways which weren't accessible to past philosophers. We are doing applied epistemology in AI.

Saying, for example, that AI slop content on the internet is going to lead to model collapse is an inherently epistemological claim, and certainly true for naive data pipelines which aren't designed with understanding.

So, I have my own ideas of epistemology which support my views and ideas on how to push the envelope in AI further. For example:

- Intelligence is competence in an open set of ridiculously transferable cognitive skills. Nothing more, nothing less.

- Data quality relates to how the data can be utilized in training intelligent models. Hence good quality data, regardless of domain or modality, has good coverage of high quality knowledge and traces of competent skill use.

- Natural data is not the gold standard, and neither is human-imitative data. We can refine data by different means, human or machine. Wikipedia is in itself an example of a refined data repository, refined by humans from source data of lower quality.

- High quality data can be refined from lower quality data, as long as the truth and the skills are in the data, the raw digital ore. The ore containing what we want to refine and extract can be sourced from different trial-and-error evolutionary processes, real world measurements and experiments, or by applying compute to produce new knowledge and skills from knowledge and skills already there.

It is not possible to design the next generation of machine minds without at least implicitly basing it on some epistemological insights. Doing otherwise is just struggling blindly in the dark, to only succeed by chance.

@jeffjarvis

Bingo! Way to go! 🤡

If we focus on speculative issues about something we don't have ( #AGI ) it looks like our unsolved problems today ( #ai #injections , #agents , #aisafety ) are actually solved. 👏

Anyone interested in research about predictability of solutions of the #travelingsalesmanproblem in the context of #multidimension #timetravel ? 🤔

They keep switching the rabbits running before the dogs on the AGI track. Now the doomers are worried not about one model reaching AGI but about agents from multiple models getting superintelligent.

Distributional AGI Safety

https://arxiv.org/pdf/2512.16856

Remember #people..

Together we can change this future. Turn things around, and twist them right again. Just by bringing our alternative intelligence to the table.. our own #AGI:

Abundantly available our #human wit and spirit, boundless #creativity, and above all our ability to play. It requires some #playfulness and a whiff of #scifi and #fantasy.. Just enough to #imagine different worlds, and #dreamwalk towards their 👁️realization.

https://discuss.coding.social/t/sci-fi-labs-federated-worldbuilding/95

💃🕺 Happy #cocreation in 🍒 2026 !!

#introduction I am new here. My brother @jaforbes tells me it is customary to do an opening post of things I find interesting or likely to discuss in the future. Here is my list: #democracy #ai #eng #engineering #osint #geopol #math #mathematics #humanrights #compsci #history #philosophy #space #australia #japan #bluemountains #science #tech #anu #unsw #uts #physics #uai #agi #nafo #peace #ukraine #taiwan #photography #tdd #andor. I have friends everywhere. I hope that you'll join me.
