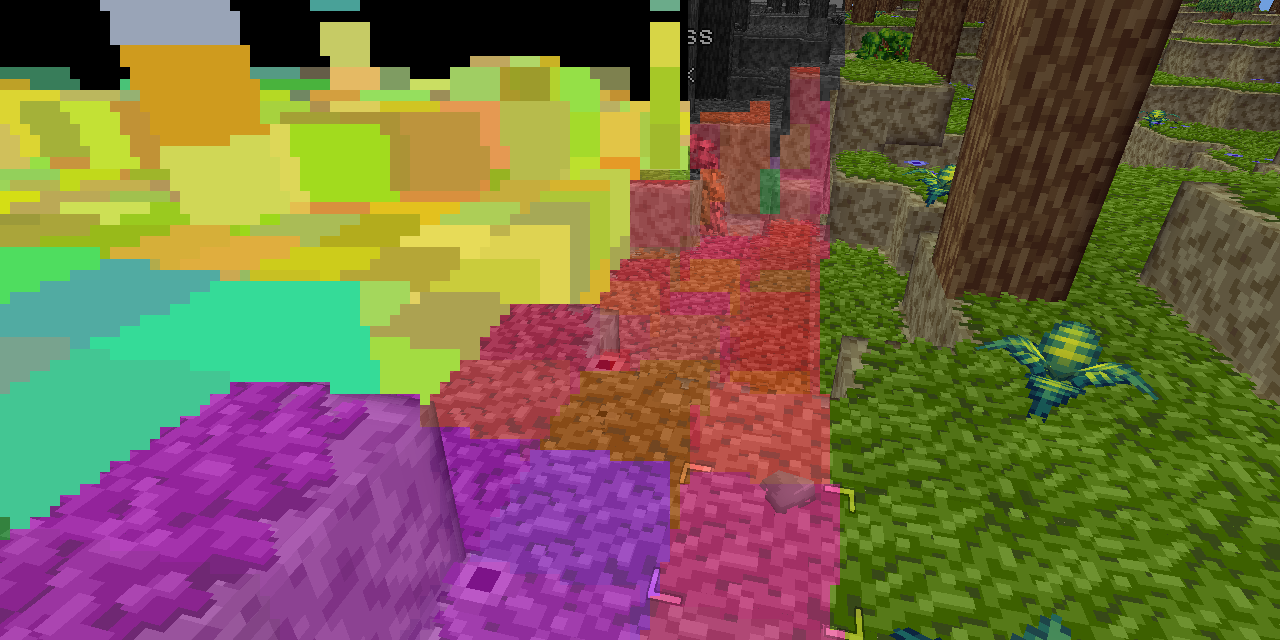

My blog post about how the software-rendered, depth-based occlusion culling in Block Game works is out now!

https://enikofox.com/posts/software-rendered-occlusion-culling-in-block-game/

Note! Any public or quiet public/unlisted replies to this toot will be shown as comments on the blog. If you don't want that, please use followers-only or private mention when replying.