About the recent attacks on mastodon.nl

Over the past few weeks there have been several moments when mastodon.nl was harder to reach. In both January and early February our servers were overloaded by enormous numbers of automated 'visitors'. It is a type of attack that we have not seen before and for which we do not yet have a definitive answer. Below we explain what happened technically. If this interests you and you can think along on the technical side, we warmly invite you to read on and to get in touch with ideas or feedback. Above all, we want to stress that we are doing everything we can to keep mastodon.nl reachable, but that in this case we are solving a rather strange puzzle. We ask for your patience and understanding should our servers become overloaded again. We are working on it!

What happened?

On 2 February one of the moderators reported that it was not possible to log in to mastodon.nl.

One of the administrators looked at the monitoring dashboards and concluded that another attack was under way.

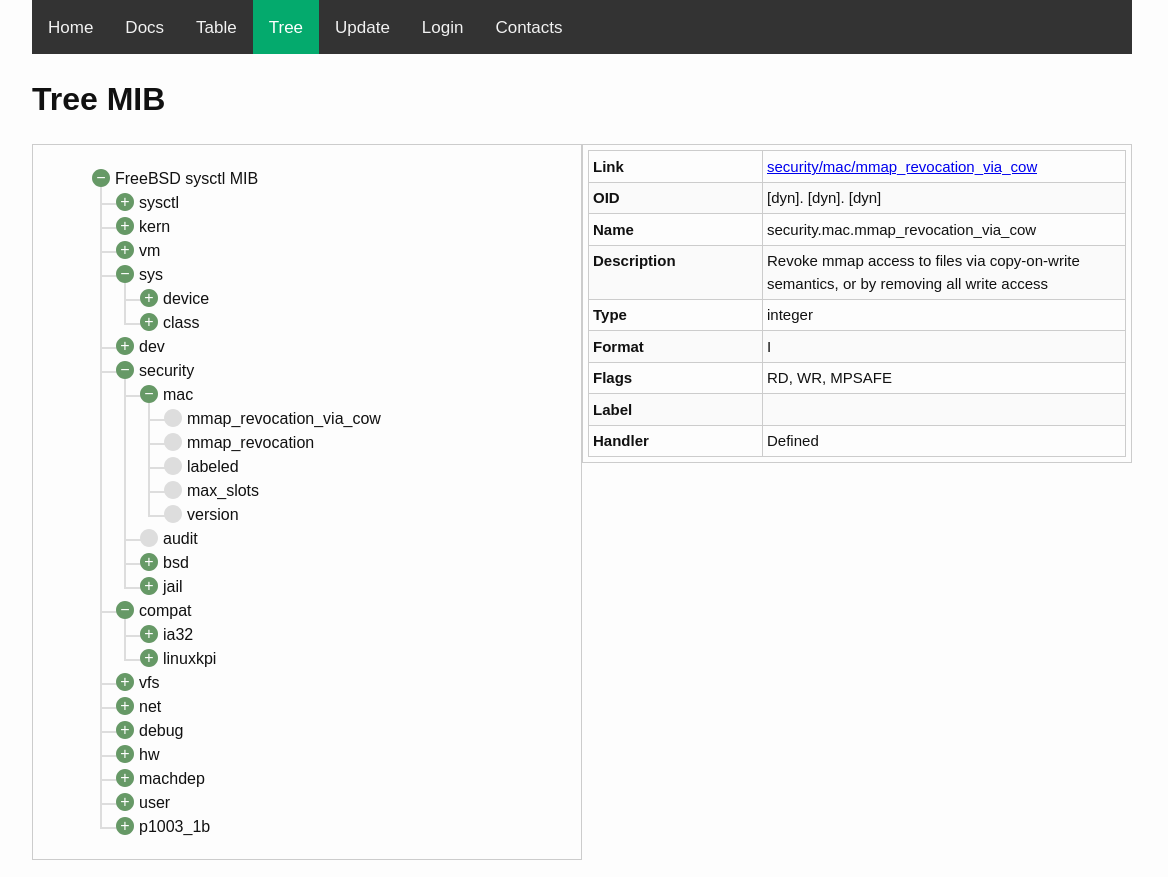

Server activity, from top to bottom: Web Worker Utilisation %, Requests per second, Web Load5/CPU Count.

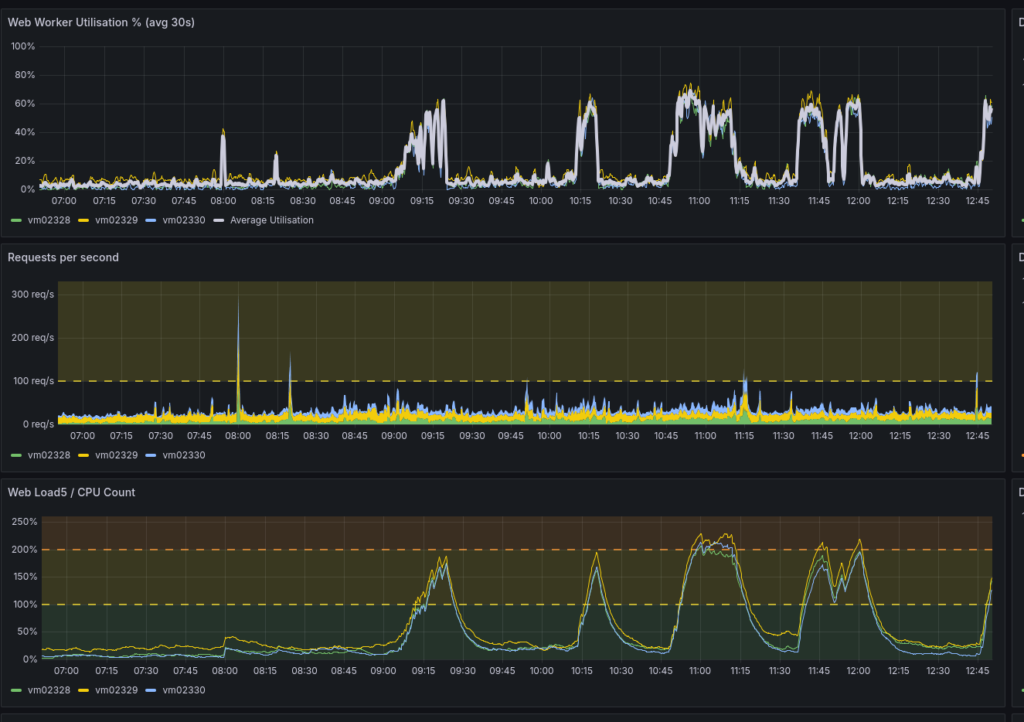

Screenshot of part of the log files.

The corresponding log files looked like the screenshot above. It looks as if the wrong parser is being used: something that tries to read a `srcset` attribute, but does so incorrectly.

The pattern: every 45 minutes there was an attack lasting 30 minutes, after which it died down again.

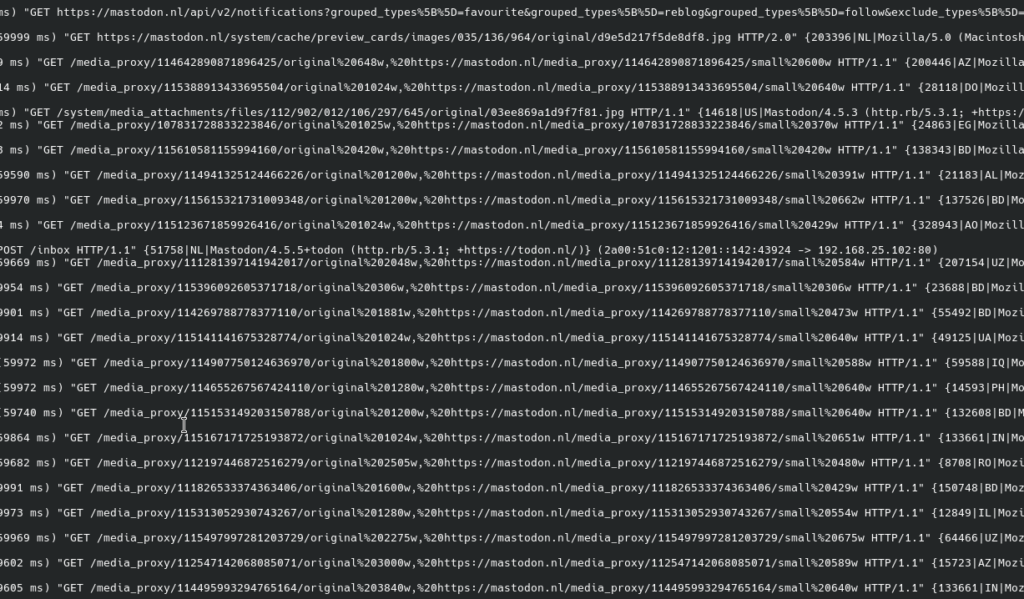

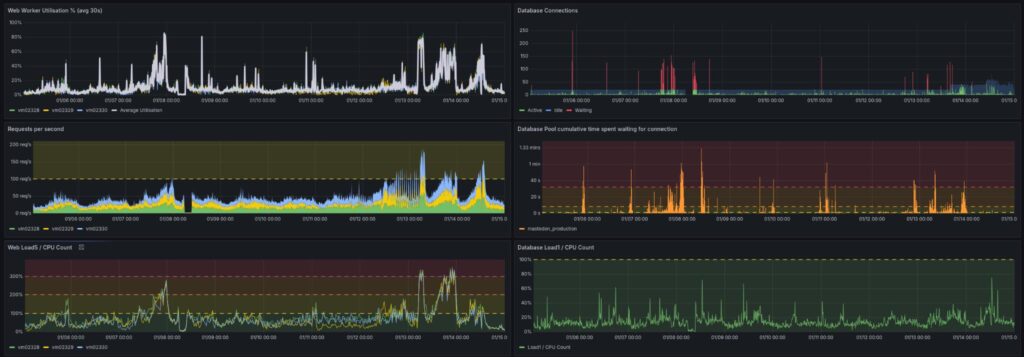

Six graphs of server activity during the attack in February. Left, from top to bottom: Web Worker Utilisation %, Requests per second, Web Load5/CPU Count. Right, from top to bottom: Database Connection, Database Pool cumulative time spent waiting for connection, Database Load1/CPU Count.

During these periods the server was kept busy for so long that it was no longer properly reachable. You can see this in the images above:

- “Web Worker Utilisation %” shows peaks at these moments;

- “Web Load5 / CPU Count” represents the load on the servers as a percentage; anything above 100% means the server does not have time to get to all requests;

- “Database Pool cumulative time spent waiting for connection” is also crucial: it shows how many database requests have to wait in the queue (that queue is a mechanism to keep the database server from becoming overloaded);

- “Database Connections” is also interesting here: red peaks indicate connections that are waiting in the queue.
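To make the "Web Load5 / CPU Count" figure concrete, here is a minimal sketch of how such a value could be computed. It assumes the panel is simply the 5-minute load average divided by the number of CPU cores, expressed as a percentage; the function names are hypothetical and this is not necessarily how the dashboard above derives it.

```python
import os


def load_per_cpu(load_average: float, cpu_count: int) -> float:
    """Return a load average as a percentage of the available CPU cores."""
    if cpu_count <= 0:
        raise ValueError("cpu_count must be positive")
    return 100.0 * load_average / cpu_count


def is_overloaded(load_average: float, cpu_count: int) -> bool:
    """Above 100% there is more runnable work than cores to run it on."""
    return load_per_cpu(load_average, cpu_count) > 100.0


def current_load5_percent() -> float:
    """Read the live 5-minute load average (Unix only) and normalise it."""
    _load1, load5, _load15 = os.getloadavg()
    return load_per_cpu(load5, os.cpu_count() or 1)
```

For example, a 5-minute load average of 12 on an 8-core machine gives 150%, which is the kind of value the peaks in the graphs correspond to under this assumption.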

This also happened in January

We already suffered from the same kind of attack in January, roughly between 5 and 15 January.

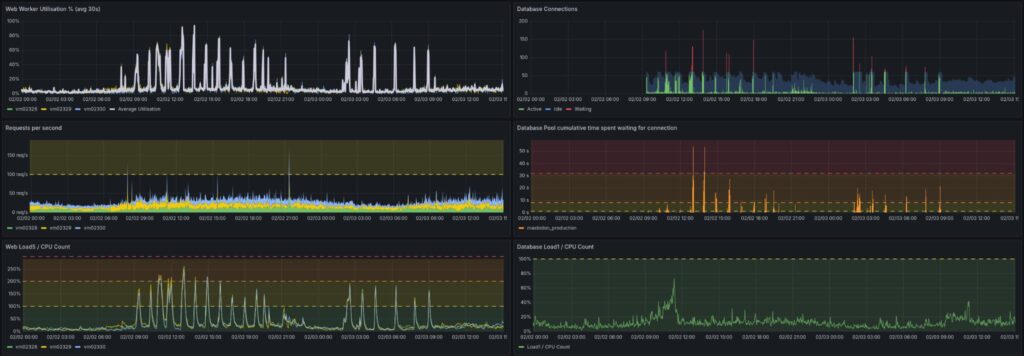

In a normal period about 25 to 50 requests per second are sent (by users and by other servers). During this period, however, non-users made an additional 50 to 100 requests per second. The graphs show that the server was most unstable on 13 and 14 January.

Six graphs of server activity during the attack in January. Left, from top to bottom: Web Worker Utilisation %, Requests per second, Web Load5/CPU Count. Right, from top to bottom: Database Connection, Database Pool cumulative time spent waiting for connection, Database Load1/CPU Count.

The profile of the non-users:

- They send their requests with a Windows 10 user agent and several (recent) versions of Google Chrome;

- They request, in large numbers, resources that a normal browser only requests when it opens mastodon.nl for the first time;

- The vast majority of requests come from outside Europe. Each request comes from a different IP address, and each IP address sends only 2 or 3 requests per minute;

- The only thing the requests have in common is that they all come from a handful of ASNs, and those ASNs are always intermediate or transit networks outside Europe, from which you would not expect any normal users at all;

- In their further behaviour these requests/browsers appear to load normal things such as posts and user accounts;

- The pattern in which the requests arrive does not look natural: over the 3 days on which requests were made, the volume was slowly ramped up over the course of the day, and it stopped around midnight or at the moment the requests were obstructed (because we blocked them, or because the server was no longer reachable).
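The profile above (many distinct IP addresses, each sending only a few requests per minute, concentrated in a small set of ASNs) can be sketched as a simple aggregation over access-log records. This is an illustrative sketch only: the `Request` record, the thresholds `min_ips` and `max_rate`, and the assumption that each log line has already been annotated with an ASN (for instance via an offline IP-to-ASN database) are all hypothetical and not taken from the setup described here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    ip: str
    asn: int      # assumed to be resolved beforehand from the IP address
    minute: int   # minute bucket of the request timestamp


def summarize_by_asn(requests):
    """Per ASN: number of distinct IPs and mean requests per IP per minute."""
    per_ip_minute = defaultdict(int)  # (asn, ip, minute) -> request count
    for r in requests:
        per_ip_minute[(r.asn, r.ip, r.minute)] += 1

    ips = defaultdict(set)
    counts = defaultdict(list)
    for (asn, ip, _minute), n in per_ip_minute.items():
        ips[asn].add(ip)
        counts[asn].append(n)

    return {
        asn: {
            "distinct_ips": len(ips[asn]),
            "mean_per_ip_minute": sum(c) / len(c),
        }
        for asn, c in counts.items()
    }


def suspicious_asns(requests, min_ips=100, max_rate=3.0):
    """ASNs with many distinct IPs that each stay at a low request rate."""
    summary = summarize_by_asn(requests)
    return sorted(
        asn
        for asn, s in summary.items()
        if s["distinct_ips"] >= min_ips and s["mean_per_ip_minute"] <= max_rate
    )
```

A heuristic like this only surfaces candidates for manual review; a busy but legitimate network could match the same shape, which is part of why this traffic is hard to block proactively.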

In short, preventing this attack proactively is very hard, because the behaviour looks exactly like plausible browser traffic: multiple versions of Chrome on Windows 10.

The solution for now

At first we focused on blocking ASNs. We have now blocked about 360 of them, because they sent repeated waves of requests that cost an enormous amount of compute time. However, there were another 1000 ASNs showing the same behaviour. We have now switched to a specific high-impact fingerprint that we were able to identify:

- Requests for proxied media that are malformed, in a way that a normal browser would never request.
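The exact fingerprint is not spelled out here, so the following is only a sketch of what such a check could look like. It assumes the malformed requests are media-proxy URLs that still carry residue of a misread `srcset` value (a comma between candidates, or a trailing width or density descriptor such as `400w` or `2x`). Both the `/media_proxy/` prefix and the pattern are assumptions made for illustration, and the function name is hypothetical.

```python
import re
from urllib.parse import unquote

# Assumed path prefix for proxied media; adjust to the actual deployment.
MEDIA_PROXY_PREFIX = "/media_proxy/"

# Residue of a srcset value that was used as if it were a single URL:
# a comma between candidates, or whitespace plus a descriptor like "400w"/"2x".
SRCSET_RESIDUE = re.compile(r"(\s+\d+(\.\d+)?[wx]\b)|,")


def looks_like_misparsed_srcset(path: str) -> bool:
    """Flag media-proxy paths that contain srcset syntax a browser would strip."""
    decoded = unquote(path.split("?", 1)[0])  # drop query string, decode %20 etc.
    if not decoded.startswith(MEDIA_PROXY_PREFIX):
        return False
    return SRCSET_RESIDUE.search(decoded) is not None
```

Matching on a malformed request shape rather than on the source network has the advantage that it does not depend on keeping a list of ASNs up to date, at the cost of only catching clients that make this particular mistake.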

Who or what is causing this?

We do not have an answer to this yet. It recently became known, for example, that Perplexity's AI bots try to hide their crawling activity, among other things by constantly changing their ASN. AI crawlers are causing server overload in many places.

We have also seen SpaceX, i.e. Starlink, being used as a route. So it is possible that this is (also) a (residential) botnet, and not (only) a crawler.

Whether AI crawlers are also the cause of the attacks on mastodon.nl is still speculation. If you read this and recognise the attack pattern, let us know. For now everything is running stably again, but we expect this behaviour to be a recurring problem and we are still looking for a definitive solution.
