“At his peak, Burt, who has been in the voiceover industry for 15 years, says he was bringing in six figures a year. ‘I never thought I’d get to that point as an artist or actor, so I was crazy-proud,’ he says. ‘Now I sell storage to pay my rent.’ While Burt says people have been talking about the threat of #AI for at least the past four years, he says it wasn’t until 2024 that things started declining rapidly.

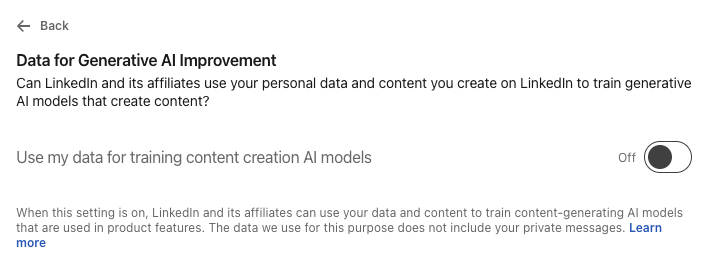

‘That was when I started seeing more and more #AITraining #jobs out there,’ he says. ‘They were getting #VoiceActors to participate in their own demise.’

‘The real visceral moment, that kick in the pants, was my voice being cloned,’ he says. ‘A previous client took recordings that we completed together, cancelled the contract and fed those recordings into an #AIModel.’

The same year, Burt lost 40 per cent of his annual turnover. Now, he says, it is down 90 per cent.”

#ZeroHourWork / #WhiteCollar / #Arts <https://www.smh.com.au/business/workplace/this-voiceover-actor-was-dumped-from-his-contract-his-voice-was-cloned-20260213-p5o230.html> / <https://archive.md/A5ZVa>