(This is a job to come work on #sciop)

https://digipres.club/@mickylindlar/116025881444877358

I've had to work on some other things lately, but I'm returning to #sciop and wrote a blog post about its current status and our plans for federation; it's about that time. I just need to finish one more feature (commenting) and some work for my main job, and then it's off to implementing distributed ActivityPub, where we decouple actors from instances.

https://blog.sciop.net/2025-12-08/sfn-and-federation

Here's another #FEP for representing torrents on ActivityPub :)

short, sweet, and with a reference implementation and tests!

towards a federated BitTorrent tracker with #sciop!

PR: https://codeberg.org/fediverse/fep/pulls/714

Discussion: https://socialhub.activitypub.rocks/t/fep-d8c8-bittorrent-torrent-objects/8309 (or this thread)

#FEP_d8c8 #BitTorrentOverActivityPub #FederatedP2P #BitTorrent

I'd like to put my lab servers to work archiving US federal data that's likely to get pulled; climate and biomedical data seem most likely. The most obvious strategy to me seems to be setting up mirror torrents on academictorrents. Is anyone compiling a list of at-risk data yet?

edit (2025-09-21): this became https://sciop.net, and it's still going, in case anyone in this thread missed it

edit (2025-09-21 pt 2): do note the date on the original post, which is nearly a year old. We have been rolling on sciop since February or so.

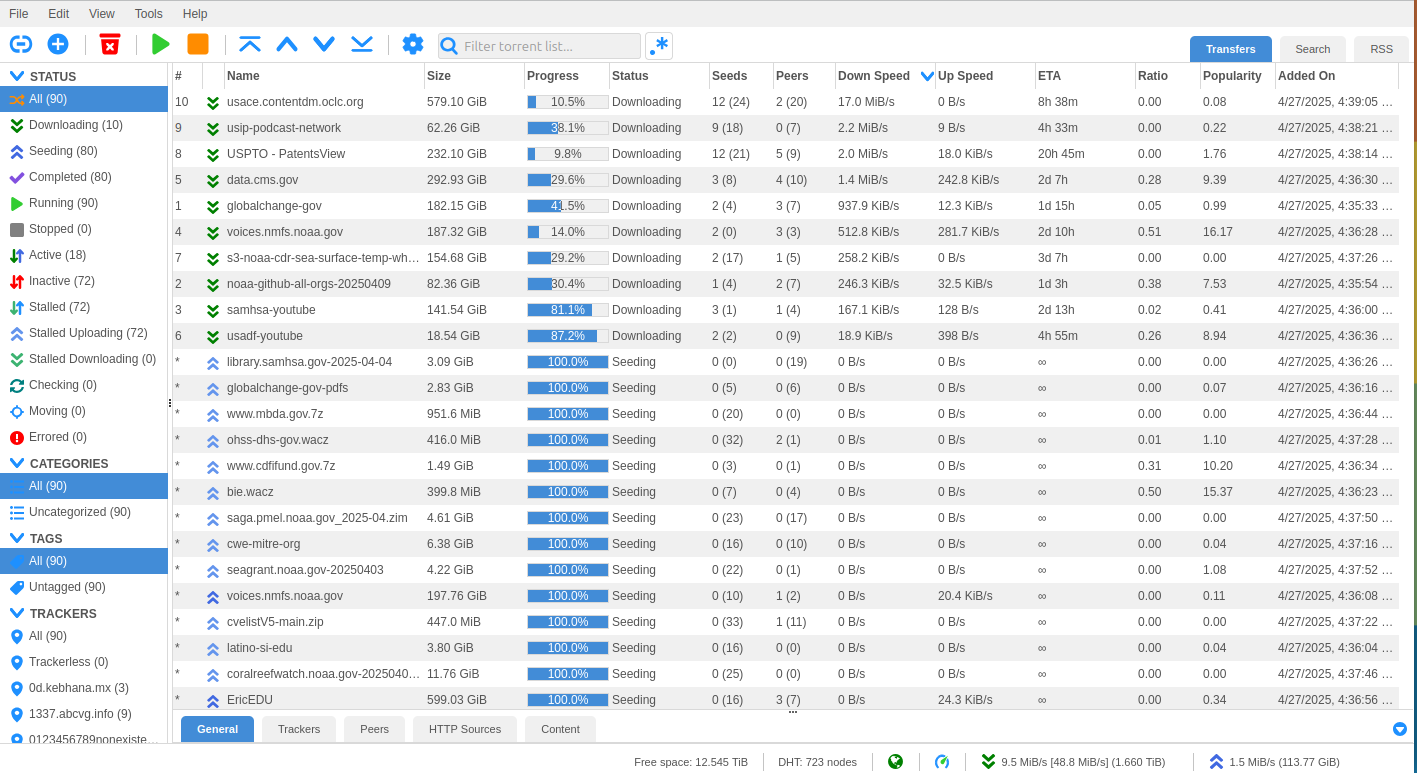

I joined the #safeguardingresearch #sciop torrent swarm to keep datasets from US science institutions easily available.

Set up a qBittorrent Docker container: https://docs.linuxserver.io/images/docker-qbittorrent/

Added the dataset feeds by threat level (I went with extinct, takedown_issued, and endangered): https://sciop.net/feeds

Done

Peer-to-peer archives are real and they work, period. 216 TiB of threatened cultural, climate, queer, and historical information held in common. That's a people-powered archive, and you're welcome in it: to take from, to add to, and to help sustain if you can.