@huntingdon AI will be a drug that we will be addicted to.

@samiamsam No, actually. This isn't an issue of Garbage In Garbage Out -- even if the source documents are good ones, the synthetic text extruding machines make papier-mâché out of them and furthermore strip context.

But an interesting question for you: Why did you think I needed the concept of GIGO pointed out here?

@emilymbender it's probably more important to educate people about how AI works so they can be reasonable about fact checking, but then that will probably lead to people not being dependent on AI and big tech won't make any money from it

@emilymbender it demonstrates how even Google doesn't want to deal with their own shit: the fact that they're trying to BLOCK it instead of fixing it shows how few fucks they give about it. They know it's shit and how temporary it is, and they're just prolonging its inevitable collapse to make even more money while it still lives.

@emilymbender That Guardian headline is itself misleading; it should say something like, "Google's false and inaccurate AI overviews continue to put users' health at risk."

@emilymbender I play a game now and then with the latest and greatest LLMs to see how easy it is to get them to make me a recipe which includes something grossly poisonous. They still fail badly.

@emilymbender It's fine they have n-dimensional guardrails 🤡

@emilymbender The theme to I DREAM OF JEANNIE is now playing in your head!

It's outa that bottle!

@emilymbender The developers do not know in advance what answer an AI will give to a specific prompt. It's a black box of answers. Therefore there is no QA of the product. It is unpredictable and therefore dangerous. Need I continue?

@emilymbender only if you see the problem as 'this could hurt or mislead people' is this a fundamental flaw.

If you see the problem as 'now that we've been openly notified, we could be sued for this particular issue', then a patch is exactly the solution. The product works* fine

*'works', verb present tense, pronounced 'attracts investors'.

@emilymbender I just wanna say, I'm thrilled that rugbies are back. I always loved rugbies.

@emilymbender If the approach to making AI return good results is to put in a bunch of special cases, can we just go back to writing proper expert systems?

"Block that search in particular" is also what Google did when the inherent racism in their image search algorithm was pointed out, in 2018.

https://www.theguardian.com/technology/2018/jan/12/google-racism-ban-gorilla-black-people

>>

And when Dr. @safiyanoble pointed out the racist results returned for queries like "black girls" in ~2016. See her amazing book Algorithms of Oppression:

https://nyupress.org/9781479837243/algorithms-of-oppression/

>>

Back to the current one, the quotes from Google in the Guardian piece are so disingenuous:

>>

@emilymbender Good News! We told our AI to audit the results of our AI and it told us it passed!

Measuring and reviewing the quality of its summaries? How does a great AI house do that?

Unleashing one AI instance on another, and letting them "duke it out"?

I think I like it.

I've been shouting about this since Google first floated the idea of LLMs as a replacement for search in 2021. Synthetic text is not a suitable tool for information access!

https://buttondown.com/maiht3k/archive/information-literacy-and-chatbots-as-search/

/fin (for now)

@emilymbender You know when you watch a movie and the caricatured evil investor says something like "Lifeboats? Nonsense! This ship was advertised as unsinkable, so lifeboats would be bad for our brand and a useless expense."?

For some reason, this feels like the same problem. They have already decided that the final destination must be a computer that talks like a human servant, and any evidence that natural conversation is not a good machine interface will be ignored.

@emilymbender The world cries out for a better search. One that can work even on an Internet full of malicious SEO engineered to generate false positives for fake reviews and other scam sites. Such a technology is desperately needed. Unfortunately we got LLMs instead, which exchange one set of problems for another: They can only return information that is true most of the time.

@emilymbender Right now I’m finding that when I have a conversation with chatGPT about a topic it’s a much better and more accurate and useful experience than using Google or DuckDuckGo searches. It includes links to sources but it also has a useful wider context that informs where it looks (like whether I’m looking for UK or USA based info), and is persistent, so I can come back to the topic months later and continue.

@emilymbender For example, here’s a conversation about what species of bat I’m looking at. It’s a much better experience than web search. Regardless of how accurate it is, the experience is going to drive usage. However it asked good clarifying questions and the answers are correct as far as I can tell. https://chatgpt.com/share/6964daa3-4a64-8009-86e9-4a1b804998a7

@emilymbender

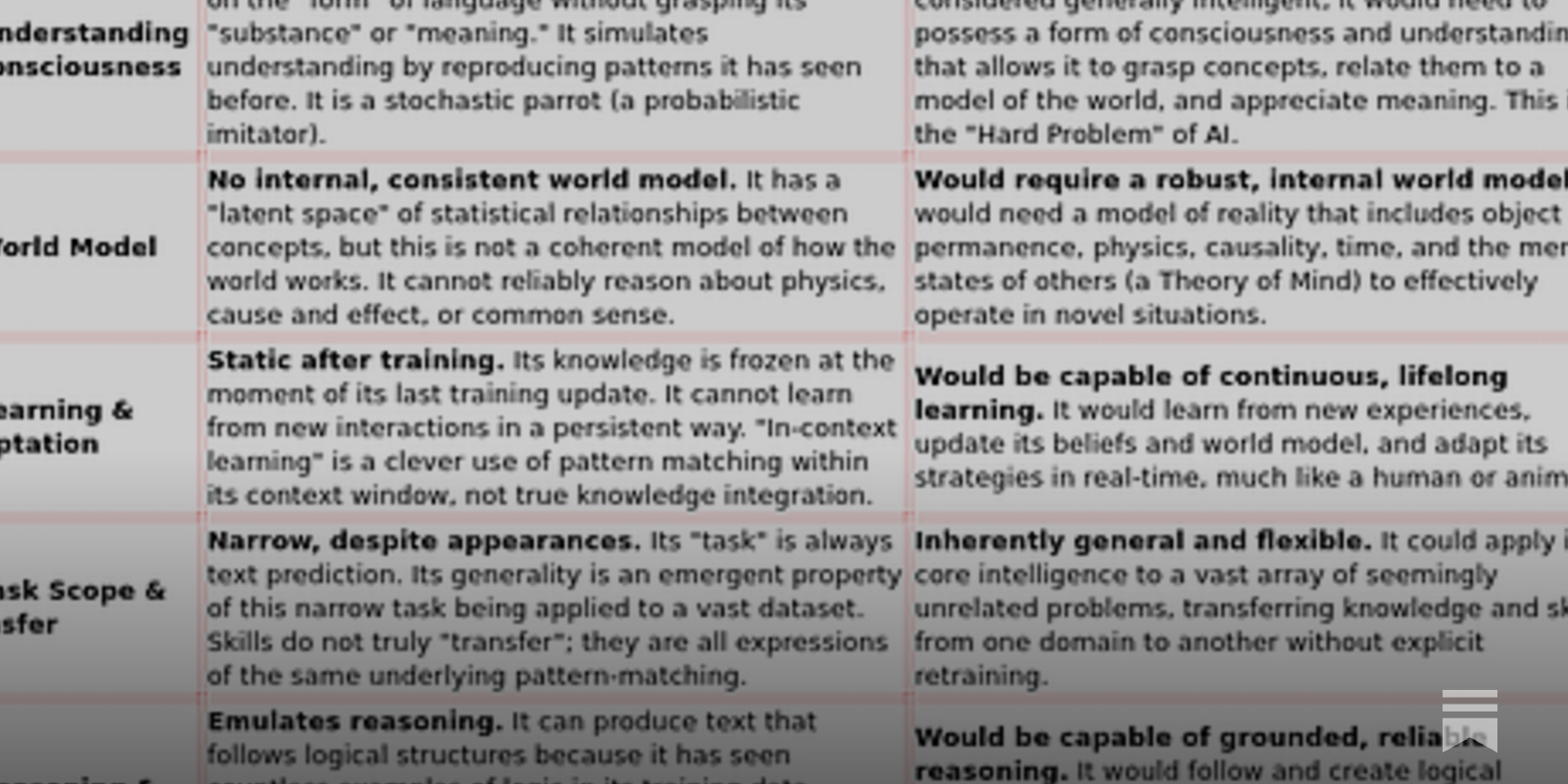

The con is to allow the user to imagine that the chatbot is AGI and not an LLM. In my experience, once it is clear what the LLM is, it is useful for finding normative language related to any number of topics. The AI market capitalization is criticized as a bubble. I think it is, too. I think that the misunderstanding regarding the chatbot will bite. I discussed this matter with DeepSeek: https://johntinker.substack.com/p/misunderstanding-as-a-commutator

@johntinker See pinned toot:

https://dair-community.social/@emilymbender/109339391065534153

Also, no, LLMs are not what you think they are, if you are "discussing" anything with them.

@emilymbender In Google's defense, they have been so good at making their search engine pure shit, "AI" search just might be better.

People should use search options that either have no AI summary or at least allow you to easily turn it off permanently as DuckDuckGo does.

@emilymbender say it louder for those in the back!!

@emilymbender we're living through a mass psychological engineering campaign and the results have been, and will continue to be, horrifying https://azhdarchid.com/are-llms-useful

The question is also "LLMs are useful to whom?"

The wealthiest seem overjoyed with it so far.

So much so that they are funding one of the largest coercive & forced user adoption campaigns in history.

It's the best at:

1. Election interference

2. Malign influence campaigns

3. Automated cyberwarfare

4. Manipulation of public sentiment

5. Automated hate campaigns

6. Plausible deniability for funding a fascist movement

7. Frying the planet