@bagder @kkarhan @undead @Ember fully agree. Everyone will outsource the monkey work to coding agents.

You should have a dev background - then you do the concept, AI does the implementation, you do QA. No different from software outsourcing projects. If step 3 is not solid, you are already lost.

The turnaround times get faster. If you don't do it, others will just use AI to reimplement your product plus the features on top 🤷🏼‍♂️

@bagder

That is defeatist thinking and a fallacy.

Yes, the slop is pushed down hard, but the answer is not to lie down and take it.

The KeePassXC account boosting your toot feels like they took it as getting a blessing or a pass.

Also, limiting the risk to code and skipping over the environmental and societal risks understates the scope of harm AI brings.

A primer for the “AI is here to stay” argument: https://www.youtube.com/watch?v=306W5Nqnbbs

@bagder @kkarhan @undead @Ember btw, I once tried to fix a bug in Mutter with the help of Copilot.

I tried to provide as much context as possible (e.g. the content of all the commits up to the point where the suspected bug was introduced, GitLab issues), some manual tweaking later...

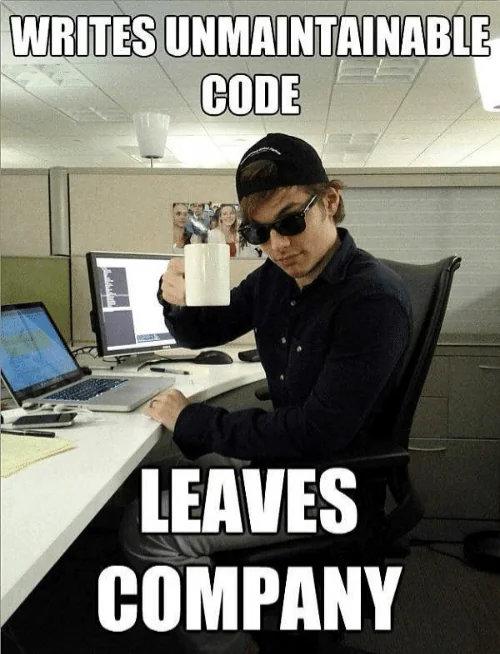

It fixed the bug with a patch that added ~20 lines and modified ~20 more. But luckily, before this PR got to the code review stage, another developer fixed it by understanding the issue (it was something like +-2 lines of code).

And later I was fixing another bug, in gnome-shell, this time without AI. The most complex part of the bugfix was not writing the code, but pinpointing the conditions under which it happens and understanding the issue. After that, fixing the bug was a piece of cake.

@bagder @kkarhan @undead @Ember

I don't think this is a good strategy. We shouldn't assume people will try to circumvent any ban. I grant that a direct confrontational ban might not be the ideal strategy, but it will still remove some AI slop that an open policy wouldn't. Not everyone who wants to contribute with AI is someone who cynically wants code in the project for whatever reason, no matter how. And AI is and will almost certainly continue to be a huge risk. The risk isn't just in the technical quality of the work, but in who's responsible and who's getting access to the code. We shouldn't assume code review will catch problematic things, and AI access isn't just the same as access by low (or high) skill contributors.

We also should push back on AI contributions just because AI is bad. Here, I mean the current "system" of commercial generative AI. It is just directly bad and causes harm, and risks much more harm. Much of that is just due to general problems in society but a lot of it is specific to current AI trends and companies.

@anselmschueler @kkarhan @undead @Ember I did not say that we should assume people will circumvent bans, even if I do believe a certain amount will when the ban is vague and next to impossible to enforce.

I'm saying a large enough share of users will use AI to write code that saying no to them will make you decline a large chunk of contributors. But sure, that's up to every project to do.

@bagder @anselmschueler @undead @Ember I'd rather choose quality over quantity as a matter of principle.

- If banning "#AI" results in less code and fewer contributions, then I feel that's a sacrifice worth making.

If I wanted to "#MoveFastAndBreakThings" I would've already chosen so...

@bagder @anselmschueler @undead having a “the contributor is responsible for all code, AI generated or not, that they contribute” rule is a much more sensible and reliable policy.