In case you needed another one, I just found a great example of why you shouldn't use LLMs for researching stuff.

I came across a citation of an article in a journal that seems a little ... off.

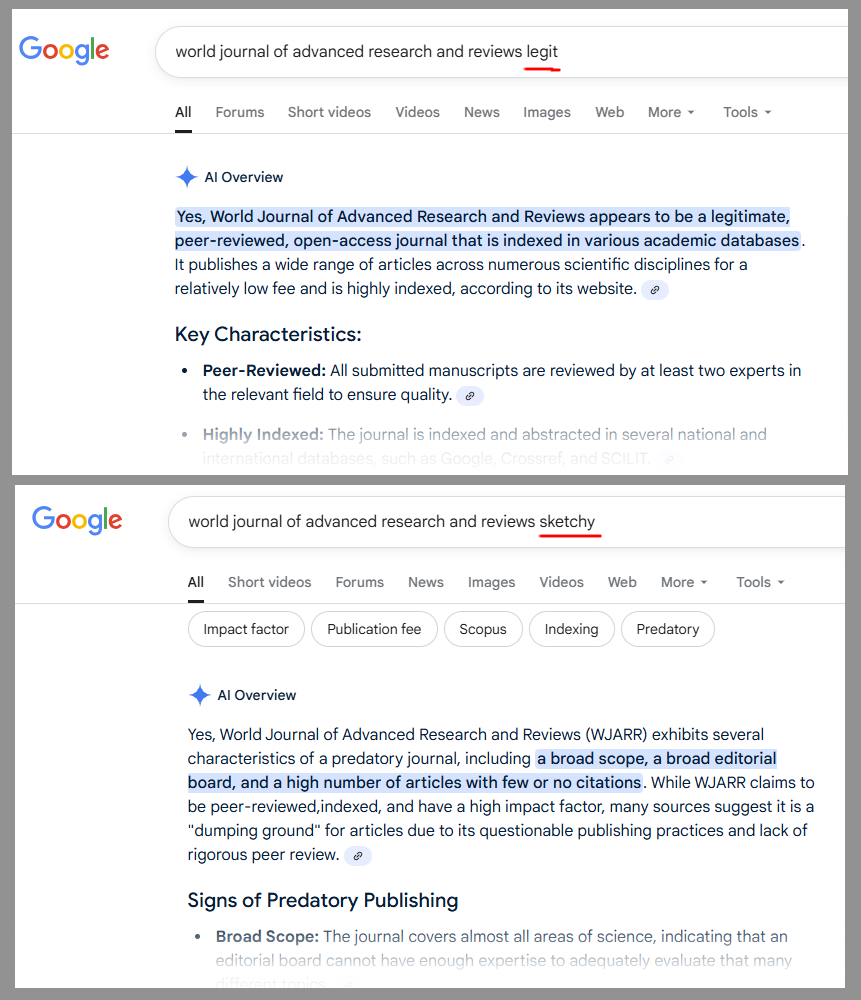

First I googled "[name of journal] legit", and the "AI Overview" confidently confirmed "Yes, that seems to be a legitimate journal, and here's why."

Then I googled "[name of journal] sketchy", and the "AI Overview" confidently confirmed "Yes, that seems to be a predatory paper mill, and here's why."