A bit of a longer post to hopefully come (but I AM bad at Task, and currently behind on many things) but I think there's a widespread antipattern in UI presentation of calling a functionality (especially safety functionality) what the user WANTS rather than what it DOES.

I think this is especially... not "dangerous" exactly, but more impactful -- in federated/distributed networks, where there isn't a Single Platform/Owner able to play god with the single database (and with a stronger ability to pretend to play god with every user's endpoint).

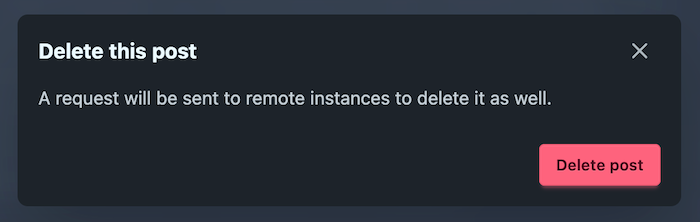

Iike, "Delete Message", e.g. I think should be called "REQUEST Delete" -- it relies fundamentally on a basic minimal level of politeness from instances (federated) or individual endpoints (E2E chat).

A lot of these are "request"s, but it gets more obviously complicated when you add it in explicitly.

Like, "block user" -> "request block user".

Who are we Requesting things of? That is, who are we trusting? And who hears of this request?