I was talking to someone yesterday (let's call them A), and they'd had another "AI" experience, one I had suspected might happen but hadn't heard of before.

They were dealing with an organization and, after asking about something specific, got a very specific answer. Weeks later the organization claimed it had never said that, and when A showed them the email as proof, the defense was: oh yeah, we're an international organization and things are busy right now, so the person who sent the original mail probably had an LLM write it, and the LLM made shit up. It literally ended with: "Let's just blame the robot ;)".

(Edit: I did read the email, and it did not read like something an LLM wrote. I think we're seeing "the LLM did it" emerge as a way to cover up mistakes.)

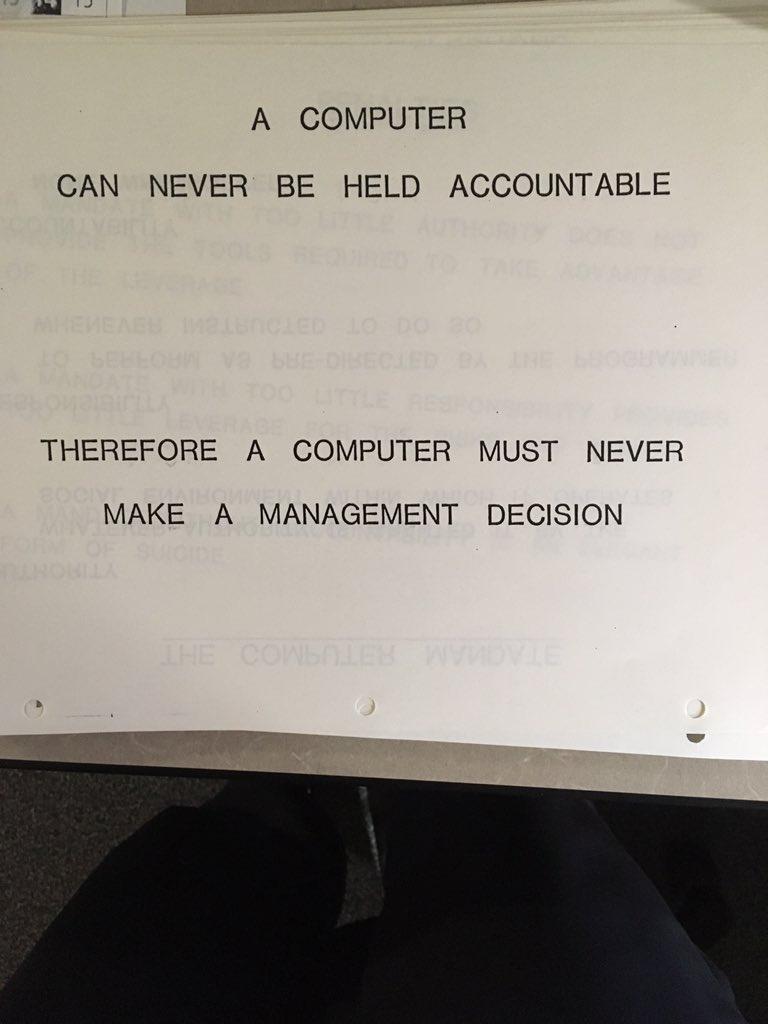

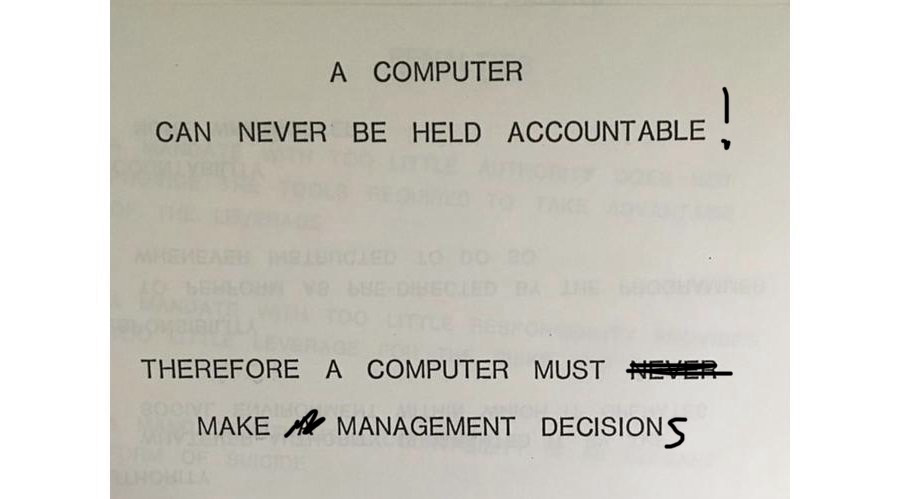

LLMs as diffusers of responsibility in corporate environments was quite obviously gonna be a key sales pitch, but it was new to me that people would use those lines in direct communication.