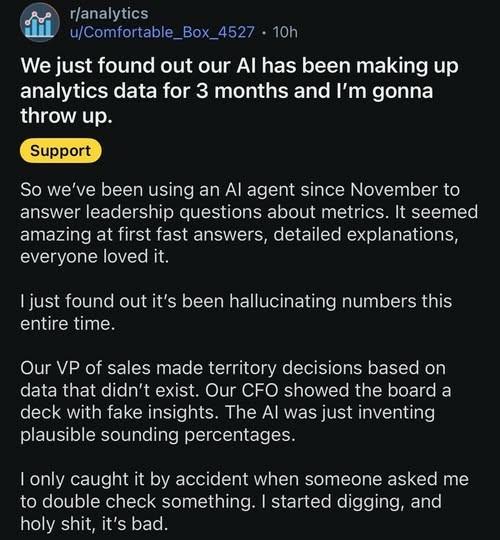

"I just found out that it's been hallucinating numbers this entire time."

Discussion

No one should be surprised.

It is mathematically impossible to stop an LLM from “hallucinating” because “hallucinating” is what LLMs are doing 100% of the time.

It’s only human beings who distinguish (ideally) between correct and incorrect synthetic text.

And yet, it’s like forbidden candy to a child. Even well-educated, thoughtful people so desperately want to believe that this tech “works.”