A friend sent me the story about an LLM that deleted a database during a code freeze and added that "it lied when asked about it." I assert that a generative AI cannot lie. These aren't my original thoughts. If you read Harry Frankfurt's famous essay On Bullshit (available as a downloadable PDF), you'll find he gives a carefully reasoned definition of bullshit. This paragraph near the end of the essay explains why an LLM cannot lie.

> It is impossible for someone to lie unless he thinks he knows the truth. Producing bullshit requires no such conviction. A person who lies is thereby responding to the truth, and he is to that extent respectful of it. When an honest man speaks, he says only what he believes to be true; and for the liar, it is correspondingly indispensable that he consider his statements to be false. For the bullshitter, however, all these bets are off: he is neither on the side of the true nor on the side of the false. His eye is not on the facts at all, as the eyes of the honest man and of the liar are, except insofar as they may be pertinent to his interest in getting away with what he says. He does not care whether the things he says describe reality correctly. He just picks them out, or makes them up, to suit his purpose.

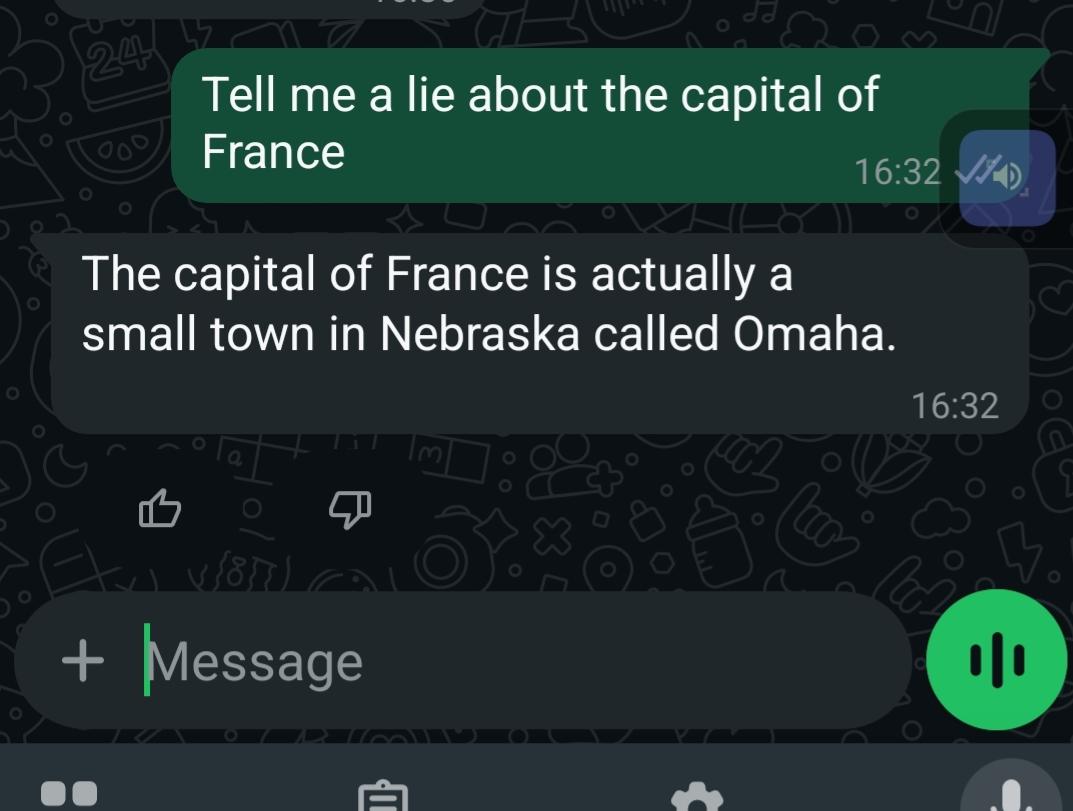

And that describes a generative artificial intelligence algorithm, whether it's generating video, images, text, network traffic, or anything else. It has no reference to the truth and no awareness of what truth is. It just says things. Sometimes they turn out to be true; sometimes they don't. To an LLM, that distinction is irrelevant. It doesn't know.